State Space Models (SSMs): An Alt to Transformers?

SSMs are nothing new; they have been applied successfully in many fields, such as control systems, physics, and economics. For now, Transformers are the kings of sequence modelling. But recently, researchers and startups have started to use a specific type of SSM to address some of the sequence modelling issues that Transformers suffer from. SSMs as an alt to Transformers? Let's see:

First, let me share 3 nice intros to SSMs:

-

A gentle introduction to SSMs. In this post, jorgecadete explains SSMs from a basic point of view. By the end of this post, you will not be an expert, but at least you will have a robust idea about why they are a fundamental concept in ML.

-

Hugging Face introduction to SSMs. There are many types of SSMs. In the context of DL, when we speak of SSMs, we are referring to a subset of existing representations, namely linear invariant (or stationary) systems.

-

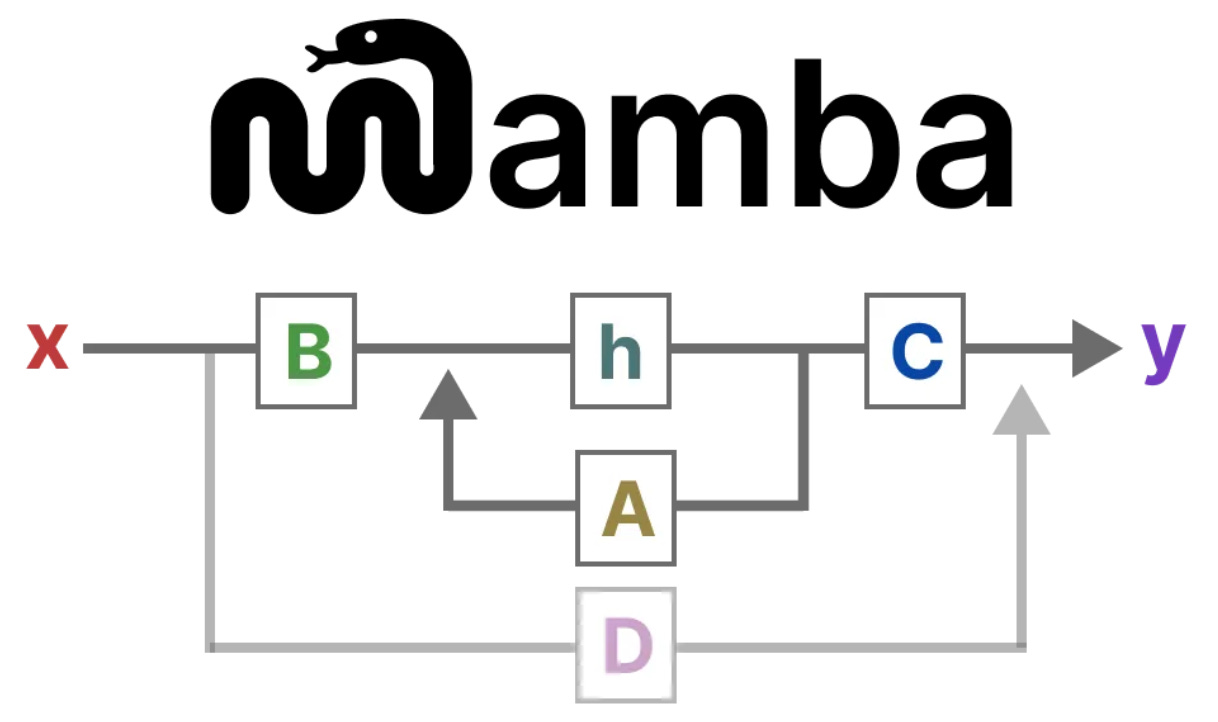

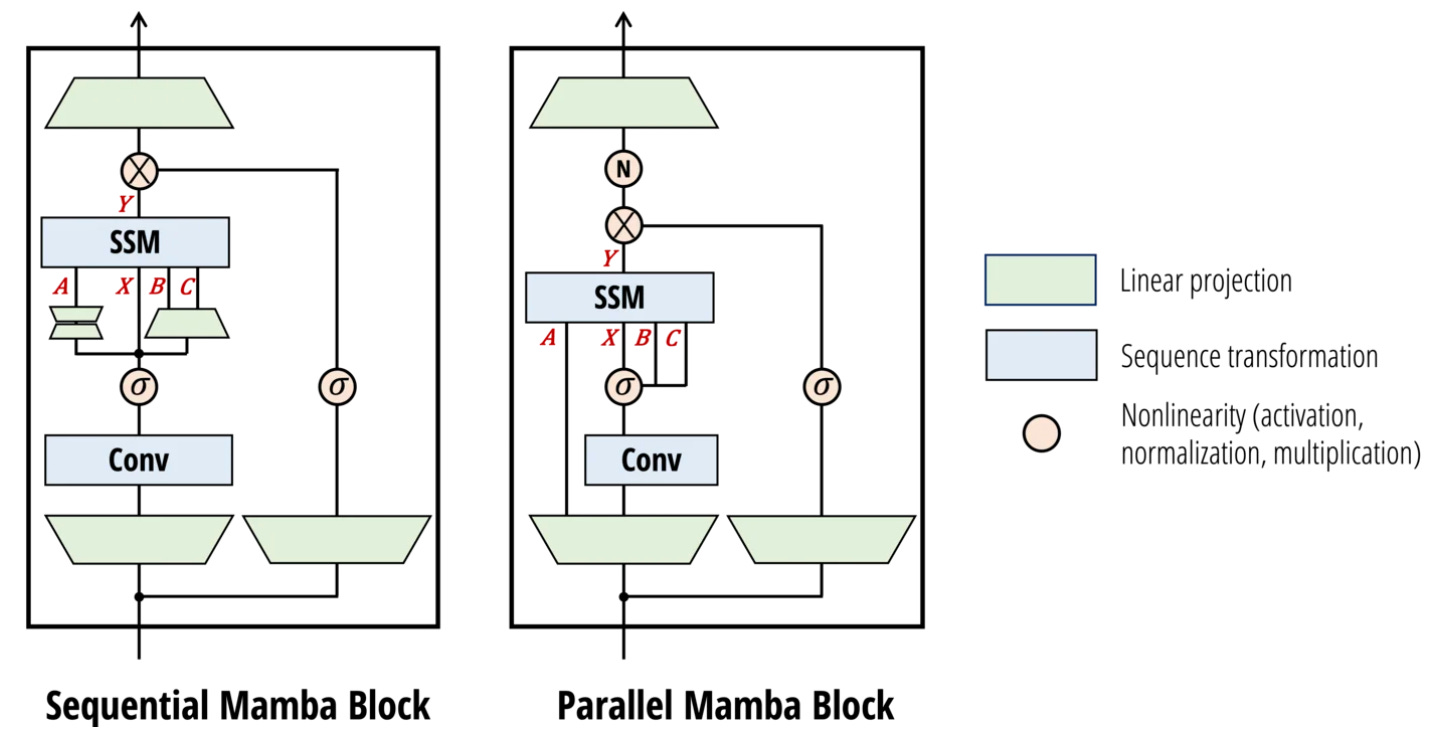

SSMs and a visual guide to Mamba. This is such a beautiful, clearly structured post! Maaten introduces SSMs in the context of LMs and explores concepts one by one to develop an intuition about the field. Then, he covers how Mamba might challenge the Transformers architecture. Brilliant!
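To make the "linear invariant (or stationary) systems" idea from the intros above a bit more concrete, here is a minimal NumPy sketch of a discrete linear SSM, x_k = A x_{k-1} + B u_k, y_k = C x_k. It is not taken from any of the linked posts: the function names, the matrix sizes, and the random parameters are assumptions chosen for illustration. It shows that, because A, B, and C do not change over time, the same output can be computed either step by step or as one causal convolution.

```python
import numpy as np

def ssm_recurrent(A, B, C, u):
    """Run x_k = A x_{k-1} + B u_k, y_k = C x_k over a 1-D input sequence u."""
    x = np.zeros(A.shape[0])
    ys = []
    for u_k in u:
        x = A @ x + B * u_k   # state update
        ys.append(C @ x)      # scalar readout
    return np.array(ys)

def ssm_convolutional(A, B, C, u):
    """Same output via the kernel K_j = C A^j B and a causal convolution."""
    L = len(u)
    kernel = np.empty(L)
    A_pow_B = B.copy()        # holds A^j B, starting at j = 0
    for j in range(L):
        kernel[j] = C @ A_pow_B
        A_pow_B = A @ A_pow_B
    return np.convolve(u, kernel)[:L]   # keep only the first L (causal) outputs

# Illustrative parameters only (assumed, not from any real model).
rng = np.random.default_rng(0)
N, L = 4, 16
A = 0.9 * np.eye(N) + 0.01 * rng.standard_normal((N, N))
B = rng.standard_normal(N)
C = rng.standard_normal(N)
u = rng.standard_normal(L)

y_rec = ssm_recurrent(A, B, C, u)
y_conv = ssm_convolutional(A, B, C, u)
print(np.allclose(y_rec, y_conv))
```

The recurrent form is the natural one for step-by-step inference, while the convolutional form lets the whole sequence be processed at once; the equivalence only holds while the parameters stay fixed across time steps.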

SSMs and the new Mamba-2. The Mamba paper (v2 May 2024) was indeed a breakthrough. Now the new Mamba-2 is out. Albert and Tri, the original devs of Mamba, just posted a fantastic blog series that covers the model, the theory, the algo, and the systems behind Mamba-2. Blogpost: State Space Duality (Mamba-2) Part I-IV

SSMs as an alternative to Transformers. This is a recent, comprehensive survey on SSMs by researchers at MSR. Although Transformers dominate sequence modelling tasks, attention is costly to compute on long inputs and they struggle with inductive bias on long sequences. SSMs have emerged as a promising alternative paradigm for sequence modelling. Read the survey here: Mamba-360: Survey of State Space Models as Transformer Alternative for Long Sequence Modelling: Methods, Applications, and Challenges.

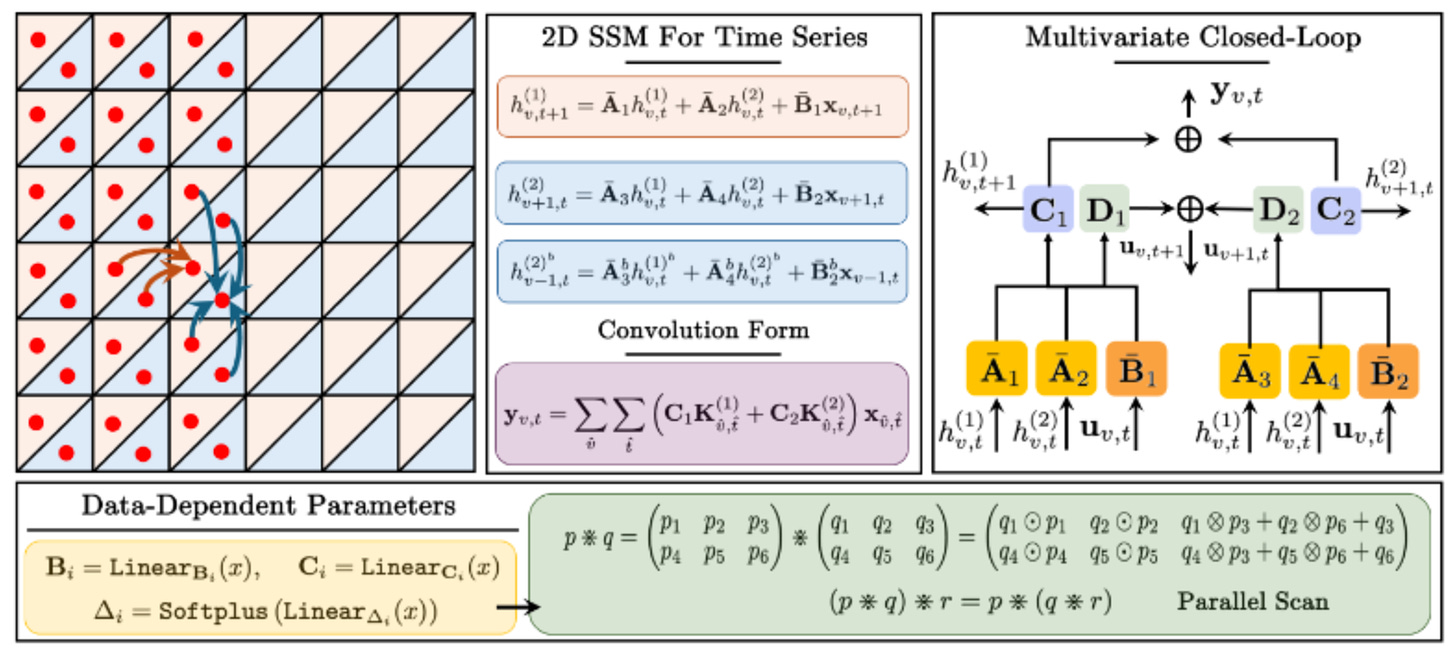

SSMs for time-series forecasting & anomaly detection. A few days ago, researchers at Cornell & NYU introduced Chimera, a new 2-dimensional SSM that shows superior performance on extensive and diverse benchmarks, including ECG and speech time series classification, long-term and short-term time series forecasting, and time series anomaly detection. Paper: Chimera: Effectively Modeling Multivariate Time Series with 2-Dimensional State Space Models.

SSMs for real-time voice & speech generation. Last week, Cartesia, a startup specialised in multimodal intelligence on any device, announced Sonic, a super-low-latency voice generation model that uses SSMs. The startup's Chief Scientist was one of the original developers of S4 (the Structured State Space Sequence model) and Mamba. In this blogpost he explains why an SSM inference stack enables low latency and high throughput for voice generation. Announcing Sonic: A Low-Latency Voice Model for Lifelike Speech
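One general reason SSMs suit streaming generation is that each new sample only updates a fixed-size state, whereas an attention layer keeps keys and values for every past token. The sketch below illustrates that difference only. It is not Cartesia's inference stack; `StreamingSSM`, `kv_cache_floats`, and all parameter values are hypothetical names and numbers made up for this example.

```python
import numpy as np

class StreamingSSM:
    """Hypothetical streaming wrapper: one fixed-size state, one step per input sample."""

    def __init__(self, A, B, C):
        self.A, self.B, self.C = A, B, C
        self.state = np.zeros(A.shape[0])

    def step(self, u_k):
        # The state is overwritten in place, so memory does not grow with sequence length.
        self.state = self.A @ self.state + self.B * u_k
        return float(self.C @ self.state)

def kv_cache_floats(num_tokens, d_model):
    """Floats held by one attention layer's key/value cache (simplified: keys + values)."""
    return 2 * num_tokens * d_model

# Illustrative parameters only (assumed).
A = np.diag([0.95, 0.9, 0.8, 0.5])
B = np.ones(4)
C = np.ones(4) / 4

model = StreamingSSM(A, B, C)
outputs = [model.step(u_k) for u_k in np.sin(np.linspace(0, 20, 1000))]

state_floats = model.state.size                            # unchanged after 1000 steps
cache_floats = kv_cache_floats(num_tokens=1000, d_model=4)  # grows with every token
print(state_floats, cache_floats)
```

Per-step cost follows the same pattern: the SSM step does a constant amount of work, while attention over a cache touches every stored token.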

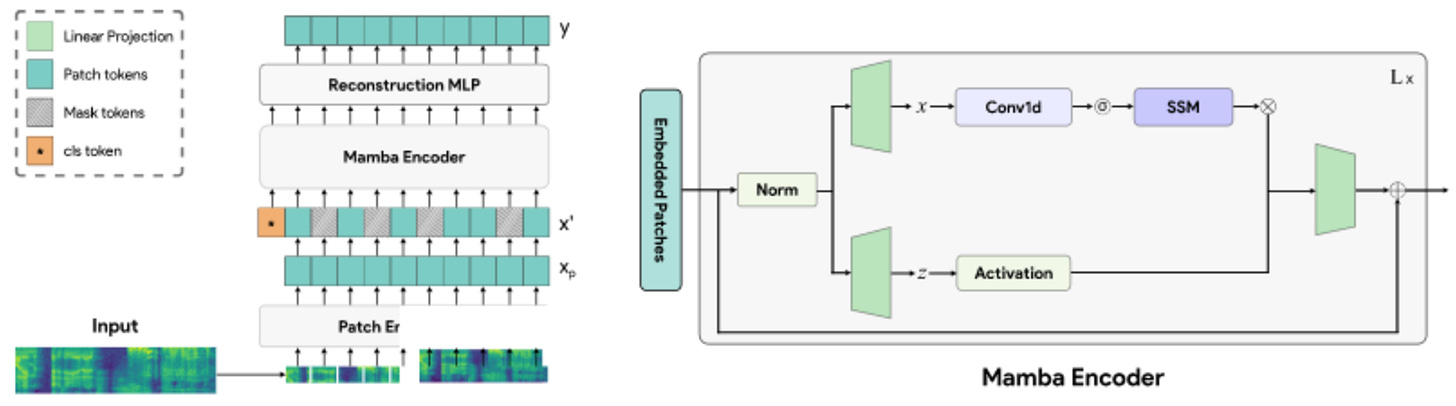

SSMs for audio. A new paper introduces Audio Mamba, an SSM for learning general-purpose audio representations from randomly masked spectrogram patches through self-supervision. The researchers claim that Audio Mamba consistently outperforms comparable self-supervised audio spectrogram transformer (SSAST) baselines by a considerable margin. Paper: Audio Mamba: Selective State Spaces for Self-Supervised Audio Representations.

A contrarian view on SSMs as an alt to Transformers. Two days ago, researchers at AllenAI & NYU published the latest update of a paper downplaying SSMs as an alt to Transformers. “Do SSMs truly have an advantage over transformers in expressive power for state tracking? Surprisingly, the answer is no.” The researchers claim that SSMs have similar limitations to non-recurrent models like transformers, which may fundamentally limit their ability to solve real-world state-tracking problems. Paper: The Illusion of State in State-Space Models.

Bonus: Mamba in NumPy. mamba.np is a new, pure NumPy implementation of Mamba.
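For a feel of what a NumPy Mamba involves, here is a minimal sketch of the selective scan at the core of the model, where the step size and the B and C terms depend on the current input. This is not the code from mamba.np. The function name, the projection matrices `W_delta`, `W_B`, `W_C`, and all shapes are assumptions made for illustration, and the sketch leaves out the convolution, gating, skip connection, and hardware-aware parallel scan of the full architecture.

```python
import numpy as np

def softplus(z):
    # Numerically stable log(1 + exp(z)); keeps the step size positive.
    return np.logaddexp(0.0, z)

def selective_scan(x, A, W_delta, W_B, W_C):
    """Sequential selective SSM scan (simplified sketch).

    x: (L, D) inputs; A: (D, N) negative diagonal state entries per channel.
    W_delta: (D, D), W_B and W_C: (D, N) are hypothetical projection weights.
    """
    L, D = x.shape
    N = A.shape[1]
    h = np.zeros((D, N))
    y = np.empty((L, D))
    for t in range(L):
        delta = softplus(x[t] @ W_delta)        # (D,) input-dependent step size
        B_t = x[t] @ W_B                        # (N,) input-dependent input matrix
        C_t = x[t] @ W_C                        # (N,) input-dependent output matrix
        A_bar = np.exp(delta[:, None] * A)      # (D, N) discretised state transition
        B_bar = delta[:, None] * B_t[None, :]   # (D, N) simplified discretisation of B
        h = A_bar * h + B_bar * x[t][:, None]   # (D, N) state update
        y[t] = h @ C_t                          # (D,) readout
    return y

# Illustrative random parameters only (assumed).
rng = np.random.default_rng(0)
L, D, N = 12, 3, 4
x = rng.standard_normal((L, D))
A = -np.exp(rng.standard_normal((D, N)))        # negative entries keep the state stable
W_delta = 0.1 * rng.standard_normal((D, D))
W_B = rng.standard_normal((D, N))
W_C = rng.standard_normal((D, N))

y = selective_scan(x, A, W_delta, W_B, W_C)
print(y.shape)
```

Because the parameters now change at every step, the fixed-kernel convolution trick of time-invariant SSMs no longer applies, which is why the sketch falls back to a plain sequential loop.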

Have a nice week.

- Lessons Learned: AI Agents is Not All you Need
- How We Use AI in Software Engineering at Google
- A New Way to Extract Interpretable Features from GPT-4
- What is AI Mechanistic Interpretability? An Intro by Anthropic
- Hello Qwen2 Family? The New SOTA in Open Source LMs
- Generative Recommenders: A New, Powerful Paradigm for RecSys
- Hugging Face LeRobot: A New, SOTA E2E for Real-world Robotics
- Nomic Embed Vision & Opensource Multi-Modal Embedding Models
- A Picture is Worth 170 Tokens: How Does GPT-4o Encode Images?
- A Super Detailed Guide to implement a QnABot on AWS (June 2024)
- Apple MLX-graphs: A Fast, Scalable Opensource Lib for GraphNNs
- The Cohere AI Agents Cookbook: A Collection of 10 iPynbs
- LitGPT: Pretrain, finetune, evaluate, and deploy 20+ LLMs on your own data with SOTA Techniques
- The Tensor Calculus You Need for Deep Learning
- [lecture] Statistical Physics of Machine Learning, May 2024
- PMLR Vol. 241 Machine Learning with Imbalanced Domains
- [trending] Scalable MatMul-free Language Modelling (paper & repo)
- Buffer of Thoughts: Thought-Augmented Reasoning with LLMs
- GraphAny: A Foundation Model for Node Classification on Any Graph
- Testing Models In Production Using Interleaving Experiments
- The LLM Journey: From POC to Production in the Real-World
- Adapting MLflow to Automate Building, Training, & Validation of Models
- Zyda: A 1.3 Trillion-token Open Dataset for Language Modelling
- MovieLens Belief 2024 Dataset for Movie Recommendations
- Solos: A Dataset for Audio-Visual Music Analysis (Experiments)

Tips? Suggestions? Feedback? Email Carlos.

Curated by @ds_ldn in the middle of the night.