Diffusion models are a new class of state-of-the-art generative models that generate diverse high-resolution images. They have already attracted a lot of attention after OpenAI, Nvidia and Google managed to train large-scale models. Example architectures that are based on diffusion models are GLIDE, DALLE-2, Imagen, and the full open-source stable diffusion.

But what is the main principle behind them?

In this blog post, we will dig our way up from the basic principles. There are already a bunch of different diffusion-based architectures. We will focus on the most prominent one, which is the Denoising Diffusion Probabilistic Models (DDPM) as initialized by Sohl-Dickstein et al and then proposed by Ho. et al 2020. Various other approaches will be discussed to a smaller extent such as stable diffusion and score-based models.

Diffusion models are fundamentally different from all the previous generative methods. Intuitively, they aim to decompose the image generation process (sampling) in many small “denoising” steps.

The intuition behind this is that the model can correct itself over these small steps and gradually produce a good sample. To some extent, this idea of refining the representation has already been used in models like alphafold. But hey, nothing comes at zero-cost. This iterative process makes them slow at sampling, at least compared to GANs.

Diffusion process

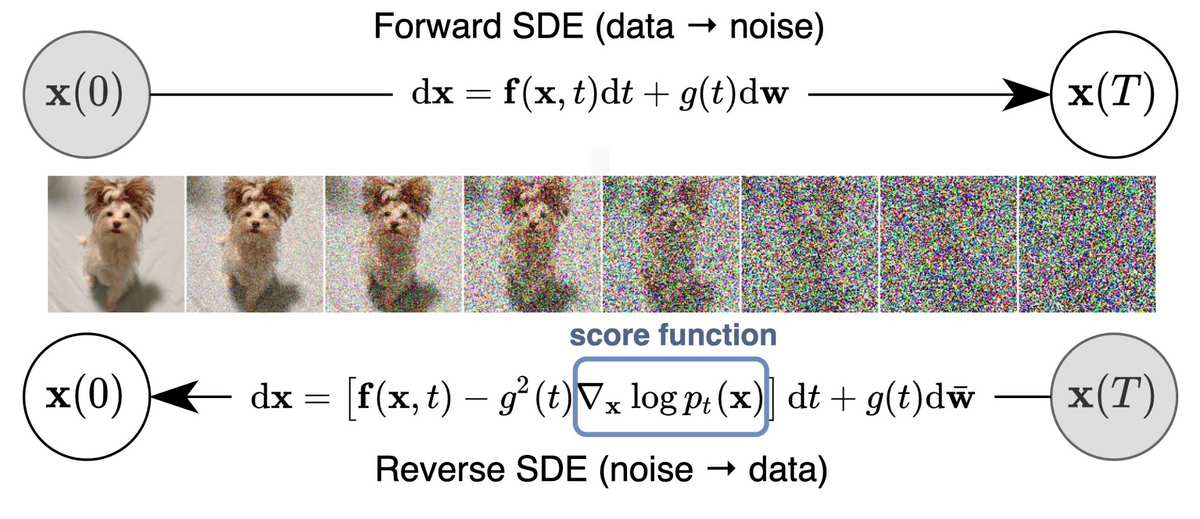

The basic idea behind diffusion models is rather simple. They take the input image and gradually add Gaussian noise to it through a series of steps. We will call this the forward process. Notably, this is unrelated to the forward pass of a neural network. If you’d like, this part is necessary to generate the targets for our neural network (the image after applying noise steps).

Afterward, a neural network is trained to recover the original data by reversing the noising process. By being able to model the reverse process, we can generate new data. This is the so-called reverse diffusion process or, in general, the sampling process of a generative model.

How? Let’s dive into the math to make it crystal clear.

Forward diffusion

Diffusion models can be seen as latent variable models. Latent means that we are referring to a hidden continuous feature space. In such a way, they may look similar to variational autoencoders (VAEs).

In practice, they are formulated using a Markov chain of steps. Here, a Markov chain means that each step only depends on the previous one, which is a mild assumption. Importantly, we are not constrained to using a specific type of neural network, unlike flow-based models.

Given a data-point sampled from the real data distribution ( ), one can define a forward diffusion process by adding noise. Specifically, at each step of the Markov chain we add Gaussian noise with variance to , producing a new latent variable with distribution . This diffusion process can be formulated as follows:

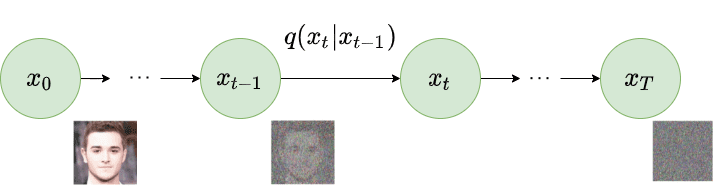

Forward diffusion process. Image modified by Ho et al. 2020

Since we are in the multi-dimensional scenario is the identity matrix, indicating that each dimension has the same standard deviation . Note that is still a normal distribution, defined by the mean and the variance where and . will always be a diagonal matrix of variances (here )

Thus, we can go in a closed form from the input data to in a tractable way. Mathematically, this is the posterior probability and is defined as:

The symbol in states that we apply repeatedly from timestep to . It’s also called trajectory.

So far, so good? Well, nah! For timestep we need to apply 500 times in order to sample . Can’t we really do better?

The reparametrization trick provides a magic remedy to this.

The reparameterization trick: tractable closed-form sampling at any timestep

If we define , where , one can use the reparameterization trick in a recursive manner to prove that:

Note: Since all timestep have the same Gaussian noise we will only use the symbol from now on.

Thus to produce a sample we can use the following distribution:

Since is a hyperparameter, we can precompute and for all timesteps. This means that we sample noise at any timestep and get in one go. Hence, we can sample our latent variable at any arbitrary timestep. This will be our target later on to calculate our tractable objective loss .

Variance schedule

The variance parameter can be fixed to a constant or chosen as a schedule over the timesteps. In fact, one can define a variance schedule, which can be linear, quadratic, cosine etc. The original DDPM authors utilized a linear schedule increasing from to . Nichol et al. 2021 showed that employing a cosine schedule works even better.

Latent samples from linear (top) and cosine (bottom)

schedules respectively. Source: Nichol & Dhariwal 2021

Reverse diffusion

As , the latent is nearly an isotropic Gaussian distribution. Therefore if we manage to learn the reverse distribution , we can sample from , run the reverse process and acquire a sample from , generating a novel data point from the original data distribution.

The question is how we can model the reverse diffusion process.

Approximating the reverse process with a neural network

In practical terms, we don’t know . It’s intractable since statistical estimates of require computations involving the data distribution.

Instead, we approximate with a parameterized model (e.g. a neural network). Since will also be Gaussian, for small enough , we can choose to be Gaussian and just parameterize the mean and variance:

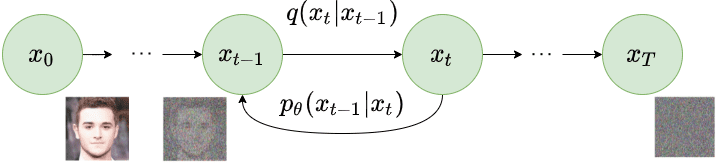

Reverse diffusion process. Image modified by Ho et al. 2020

If we apply the reverse formula for all timesteps (, also called trajectory), we can go from to the data distribution:

By additionally conditioning the model on timestep , it will learn to predict the Gaussian parameters (meaning the mean and the covariance matrix ) for each timestep.

But how do we train such a model?

Training a diffusion model

If we take a step back, we can notice that the combination of and is very similar to a variational autoencoder (VAE). Thus, we can train it by optimizing the negative log-likelihood of the training data. After a series of calculations, which we won’t analyze here, we can write the evidence lower bound (ELBO) as follows:

Let’s analyze these terms:

-

The term can been as a reconstruction term, similar to the one in the ELBO of a variational autoencoder. In Ho et al 2020 , this term is learned using a separate decoder.

-

shows how close is to the standard Gaussian. Note that the entire term has no trainable parameters so it’s ignored during training.

-

The third term , also referred as , formulate the difference between the desired denoising steps and the approximated ones .

It is evident that through the ELBO, maximizing the likelihood boils down to learning the denoising steps .

Important note: Even though is intractable Sohl-Dickstein et al illustrated that by additionally conditioning on makes it tractable.

Intuitively, a painter (our generative model) needs a reference image () to slowly draw (reverse diffusion step ) an image. Thus, we can take a small step backwards, meaning from noise to generate an image, if and only if we have as a reference.

In other words, we can sample at noise level conditioned on . Since and , we can prove that:

Note that and depend only on , so they can be precomputed.

This little trick provides us with a fully tractable ELBO. The above property has one more important side effect, as we already saw in the reparameterization trick, we can represent as

where .

By combining the last two equations, each timestep will now have a mean (our target) that only depends on :

Therefore we can use a neural network to approximate and consequently the mean:

Thus, the loss function (the denoising term in the ELBO) can be expressed as:

This effectively shows us that instead of predicting the mean of the distribution, the model will predict the noise at each timestep .

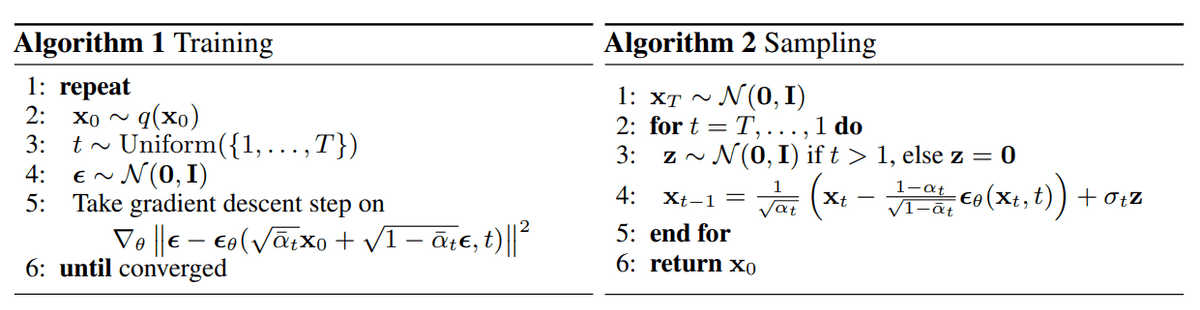

Ho et.al 2020 made a few simplifications to the actual loss term as they ignore a weighting term. The simplified version outperforms the full objective:

The authors found that optimizing the above objective works better than optimizing the original ELBO. The proof for both equations can be found in this excellent post by Lillian Weng or in Luo et al. 2022.

Additionally, Ho et. al 2020 decide to keep the variance fixed and have the network learn only the mean. This was later improved by Nichol et al. 2021, who decide to let the network learn the covariance matrix as well (by modifying ), achieving better results.

Training and sampling algorithms of DDPMs. Source: Ho et al. 2020

Architecture

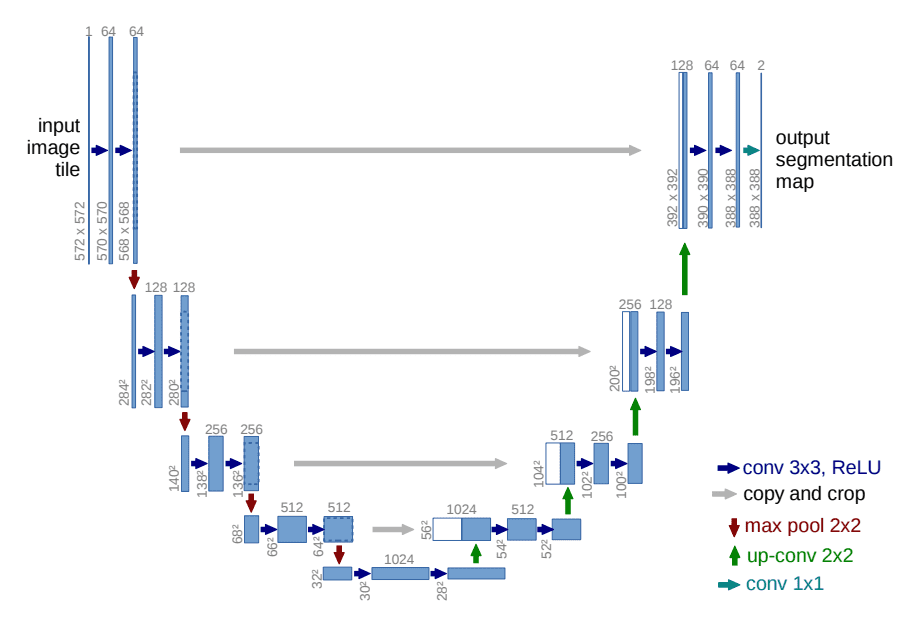

One thing that we haven’t mentioned so far is what the model’s architecture looks like. Notice that the model’s input and output should be of the same size.

To this end, Ho et al. employed a U-Net. If you are unfamiliar with U-Nets, feel free to check out our past article on the major U-Net architectures. In a few words, a U-Net is a symmetric architecture with input and output of the same spatial size that uses skip connections between encoder and decoder blocks of corresponding feature dimension. Usually, the input image is first downsampled and then upsampled until reaching its initial size.

In the original implementation of DDPMs, the U-Net consists of Wide ResNet blocks, group normalization as well as self-attention blocks.

The diffusion timestep is specified by adding a sinusoidal position embedding into each residual block. For more details, feel free to visit the official GitHub repository. For a detailed implementation of the diffusion model, check out this awesome post by Hugging Face.

The U-Net architecture. Source: Ronneberger et al.

Conditional Image Generation: Guided Diffusion

A crucial aspect of image generation is conditioning the sampling process to manipulate the generated samples. Here, this is also referred to as guided diffusion.

There have even been methods that incorporate image embeddings into the diffusion in order to “guide” the generation. Mathematically, guidance refers to conditioning a prior data distribution with a condition , i.e. the class label or an image/text embedding, resulting in .

To turn a diffusion model into a conditional diffusion model, we can add conditioning information at each diffusion step.

The fact that the conditioning is being seen at each timestep may be a good justification for the excellent samples from a text prompt.

In general, guided diffusion models aim to learn . So using the Bayes rule, we can write:

is removed since the gradient operator refers only to , so no gradient for . Moreover remember that .

And by adding a guidance scalar term , we have:

Using this formulation, let’s make a distinction between classifier and classifier-free guidance. Next, we will present two family of methods aiming at injecting label information.

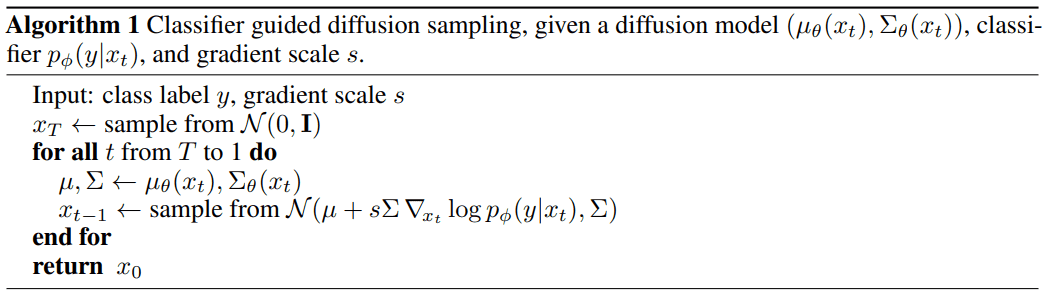

Classifier guidance

Sohl-Dickstein et al. and later Dhariwal and Nichol showed that we can use a second model, a classifier , to guide the diffusion toward the target class during training. To achieve that, we can train a classifier on the noisy image to predict its class . Then we can use the gradients to guide the diffusion. How?

We can build a class-conditional diffusion model with mean and variance .

Since , we can show using the guidance formulation from the previous section that the mean is perturbed by the gradients of of class , resulting in:

In the famous GLIDE paper by Nichol et al, the authors expanded on this idea and use CLIP embeddings to guide the diffusion. CLIP as proposed by Saharia et al., consists of an image encoder and a text encoder . It produces an image and text embeddings and , respectively, wherein is the text caption.

Therefore, we can perturb the gradients with their dot product:

As a result, they manage to “steer” the generation process toward a user-defined text caption.

Algorithm of classifier guided diffusion sampling. Source: Dhariwal & Nichol 2021

Classifier-free guidance

Using the same formulation as before we can define a classifier-free guided diffusion model as:

Guidance can be achieved without a second classifier model as proposed by Ho & Salimans. Instead of training a separate classifier, the authors trained a conditional diffusion model together with an unconditional model . In fact, they use the exact same neural network. During training, they randomly set the class to , so that the model is exposed to both the conditional and unconditional setup:

Note that this can also be used to “inject” text embeddings as we showed in classifier guidance.

This admittedly “weird” process has two major advantages:

-

It uses only a single model to guide the diffusion.

-

It simplifies guidance when conditioning on information that is difficult to predict with a classifier (such as text embeddings).

Imagen as proposed by Saharia et al. relies heavily on classifier-free guidance, as they find that it is a key contributor to generating samples with strong image-text alignment. For more info on the approach of Imagen check out this video from AI Coffee Break with Letitia:

Scaling up diffusion models

You might be asking what is the problem with these models. Well, it’s computationally very expensive to scale these U-nets into high-resolution images. This brings us to two methods for scaling up diffusion models to higher resolutions: cascade diffusion models and latent diffusion models.

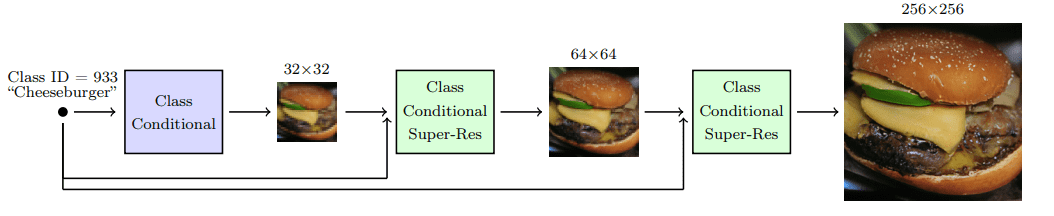

Cascade diffusion models

Ho et al. 2021 introduced cascade diffusion models in an effort to produce high-fidelity images. A cascade diffusion model consists of a pipeline of many sequential diffusion models that generate images of increasing resolution. Each model generates a sample with superior quality than the previous one by successively upsampling the image and adding higher resolution details. To generate an image, we sample sequentially from each diffusion model.

Cascade diffusion model pipeline. Source: Ho & Saharia et al.

To acquire good results with cascaded architectures, strong data augmentations on the input of each super-resolution model are crucial. Why? Because it alleviates compounding error from the previous cascaded models, as well as due to a train-test mismatch.

It was found that gaussian blurring is a critical transformation toward achieving high fidelity. They refer to this technique as conditioning augmentation.

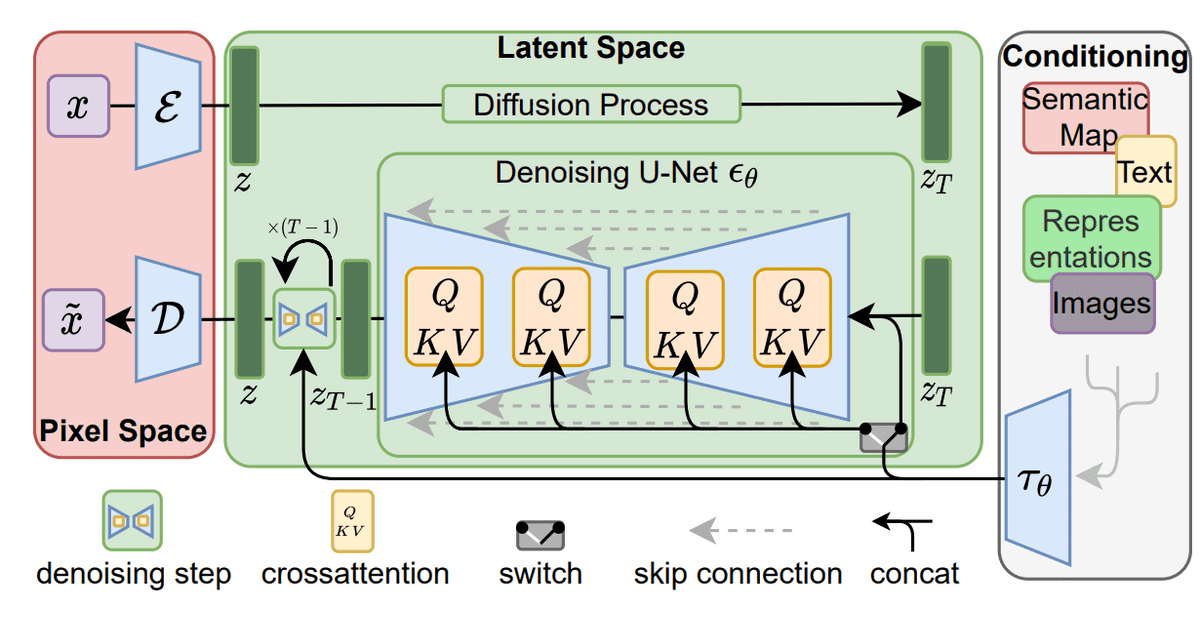

Stable diffusion: Latent diffusion models

Latent diffusion models are based on a rather simple idea: instead of applying the diffusion process directly on a high-dimensional input, we project the input into a smaller latent space and apply the diffusion there.

In more detail, Rombach et al. proposed to use an encoder network to encode the input into a latent representation i.e. . The intuition behind this decision is to lower the computational demands of training diffusion models by processing the input in a lower dimensional space. Afterward, a standard diffusion model (U-Net) is applied to generate new data, which are upsampled by a decoder network.

If the loss for a typical diffusion model (DM) is formulated as:

then given an encoder and a latent representation , the loss for a latent diffusion model (LDM) is:

Latent diffusion models. Source: Rombach et al

For more information check out this video:

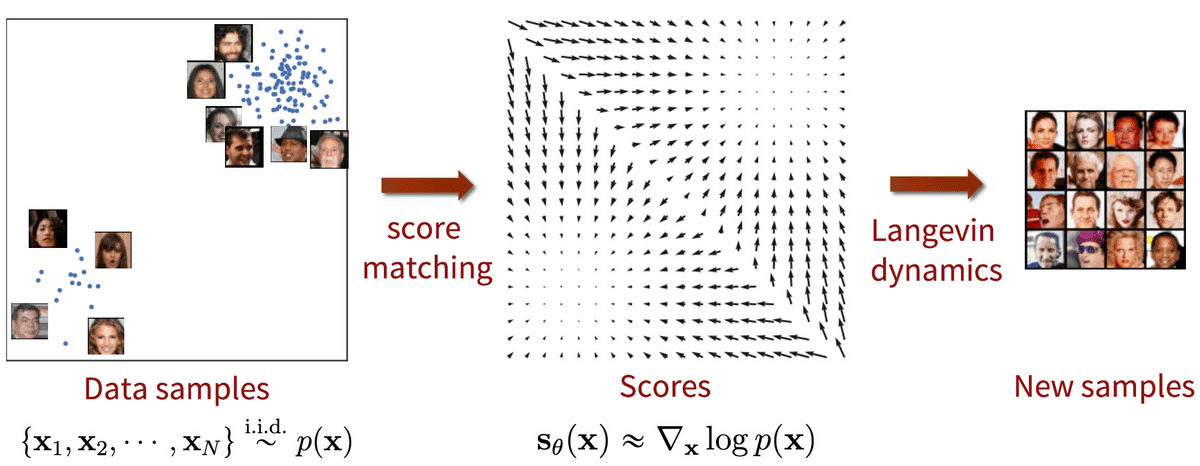

Score-based generative models

Around the same time as the DDPM paper, Song and Ermon proposed a different type of generative model that appears to have many similarities with diffusion models. Score-based models tackle generative learning using score matching and Langevin dynamics.

Score-matching refers to the process of modeling the gradient of the log probability density function, also known as the score function. Langevin dynamics is an iterative process that can draw samples from a distribution using only its score function.

where is the step size.

Suppose that we have a probability density and that we define the score function to be . We can then train a neural network to estimate without estimating first. The training objective can be formulated as follows:

Then by using Langevin dynamics, we can directly sample from using the approximated score function.

In case you missed it, guided diffusion models use this formulation of score-based models as they learn directly . Of course, they don’t rely on Langevin dynamics.

Adding noise to score-based models: Noise Conditional Score Networks (NCSN)

The problem so far: the estimated score functions are usually inaccurate in low-density regions, where few data points are available. As a result, the quality of data sampled using Langevin dynamics is not good.

Their solution was to perturb the data points with noise and train score-based models on the noisy data points instead. As a matter of fact, they used multiple scales of Gaussian noise perturbations.

Thus, adding noise is the key to make both DDPM and score based models work.

Score-based generative modeling with score matching + Langevin dynamics. Source: Generative Modeling by Estimating Gradients of the Data Distribution

Mathematically, given the data distribution , we perturb with Gaussian noise where to obtain a noise-perturbed distribution:

Then we train a network , known as Noise Conditional Score-Based Network (NCSN) to estimate the score function . The training objective is a weighted sum of Fisher divergences for all noise scales.

Score-based generative modeling through stochastic differential equations (SDE)

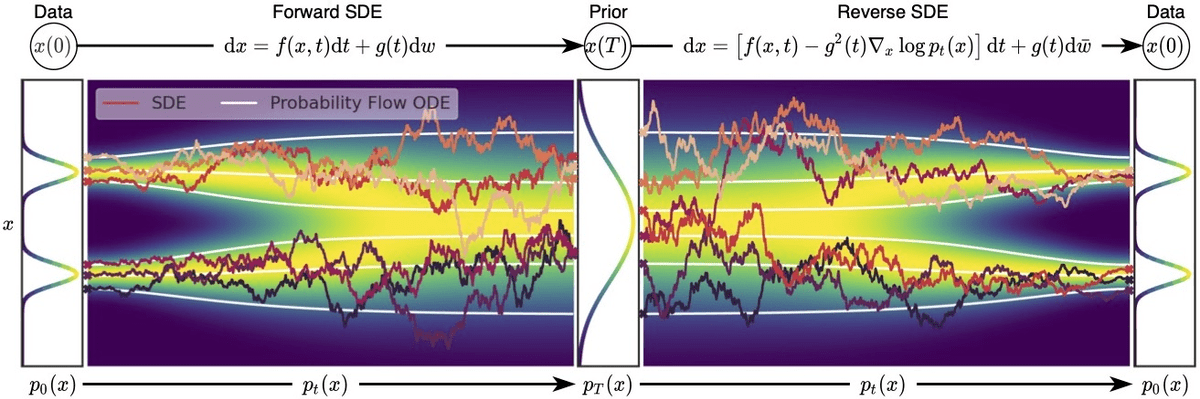

Song et al. 2021 explored the connection of score-based models with diffusion models. In an effort to encapsulate both NSCNs and DDPMs under the same umbrella, they proposed the following:

Instead of perturbing data with a finite number of noise distributions, we use a continuum of distributions that evolve over time according to a diffusion process. This process is modeled by a prescribed stochastic differential equation (SDE) that does not depend on the data and has no trainable parameters. By reversing the process, we can generate new samples.

Score-based generative modeling through stochastic differential equations (SDE). Source: Song et al. 2021

We can define the diffusion process as an SDE in the following form:

where is the Wiener process (a.k.a., Brownian motion), is a vector-valued function called the drift coefficient of , and is a scalar function known as the diffusion coefficient of . Note that the SDE typically has a unique strong solution.

To make sense of why we use an SDE, here is a tip: the SDE is inspired by the Brownian motion, in which a number of particles move randomly inside a medium. This randomness of the particles’ motion models the continuous noise perturbations on the data.

After perturbing the original data distribution for a sufficiently long time, the perturbed distribution becomes close to a tractable noise distribution.

To generate new samples, we need to reverse the diffusion process. The SDE was chosen to have a corresponding reverse SDE in closed form:

To compute the reverse SDE, we need to estimate the score function . This is done using a score-based model and Langevin dynamics. The training objective is a continuous combination of Fisher divergences:

where denotes a uniform distribution over the time interval, and is a positive weighting function. Once we have the score function, we can plug it into the reverse SDE and solve it in order to sample from the original data distribution .

There are a number of options to solve the reverse SDE which we won’t analyze here. Make sure to check the original paper or this excellent blog post by the author.

Overview of score-based generative modeling through SDEs. Source: Song et al. 2021

Summary

Let’s do a quick sum-up of the main points we learned in this blogpost:

-

Diffusion models work by gradually adding gaussian noise through a series of steps into the original image, a process known as diffusion.

-

To sample new data, we approximate the reverse diffusion process using a neural network.

-

The training of the model is based on maximizing the evidence lower bound (ELBO).

-

We can condition the diffusion models on image labels or text embeddings in order to “guide” the diffusion process.

-

Cascade and Latent diffusion are two approaches to scale up models to high-resolutions.

-

Cascade diffusion models are sequential diffusion models that generate images of increasing resolution.

-

Latent diffusion models (like stable diffusion) apply the diffusion process on a smaller latent space for computational efficiency using a variational autoencoder for the up and downsampling.

-

Score-based models also apply a sequence of noise perturbations to the original image. But they are trained using score-matching and Langevin dynamics. Nonetheless, they end up in a similar objective.

-

The diffusion process can be formulated as an SDE. Solving the reverse SDE allows us to generate new samples.

Finally, for more associations between diffusion models and VAE or AE check out these really nice blogs.

Cite as

@article{karagiannakos2022diffusionmodels,

title = "Diffusion models: toward state-of-the-art image generation",

author = "Karagiannakos, Sergios, Adaloglou, Nikolaos",

journal = "https://theaisummer.com/",

year = "2022",

howpublished = {https://theaisummer.com/diffusion-models/},

}

References

[1] Sohl-Dickstein, Jascha, et al. Deep Unsupervised Learning Using Nonequilibrium Thermodynamics. arXiv:1503.03585, arXiv, 18 Nov. 2015

[2] Ho, Jonathan, et al. Denoising Diffusion Probabilistic Models. arXiv:2006.11239, arXiv, 16 Dec. 2020

[3] Nichol, Alex, and Prafulla Dhariwal. Improved Denoising Diffusion Probabilistic Models. arXiv:2102.09672, arXiv, 18 Feb. 2021

[4] Dhariwal, Prafulla, and Alex Nichol. Diffusion Models Beat GANs on Image Synthesis. arXiv:2105.05233, arXiv, 1 June 2021

[5] Nichol, Alex, et al. GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models. arXiv:2112.10741, arXiv, 8 Mar. 2022

[6] Ho, Jonathan, and Tim Salimans. Classifier-Free Diffusion Guidance. 2021. openreview.net

[7] Ramesh, Aditya, et al. Hierarchical Text-Conditional Image Generation with CLIP Latents. arXiv:2204.06125, arXiv, 12 Apr. 2022

[8] Saharia, Chitwan, et al. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. arXiv:2205.11487, arXiv, 23 May 2022

[9] Rombach, Robin, et al. High-Resolution Image Synthesis with Latent Diffusion Models. arXiv:2112.10752, arXiv, 13 Apr. 2022

[10] Ho, Jonathan, et al. Cascaded Diffusion Models for High Fidelity Image Generation. arXiv:2106.15282, arXiv, 17 Dec. 2021

[11] Weng, Lilian. What Are Diffusion Models? 11 July 2021

[12] O’Connor, Ryan. Introduction to Diffusion Models for Machine Learning AssemblyAI Blog, 12 May 2022

[13] Rogge, Niels and Rasul, Kashif. The Annotated Diffusion Model . Hugging Face Blog, 7 June 2022

[14] Das, Ayan. “An Introduction to Diffusion Probabilistic Models.” Ayan Das, 4 Dec. 2021

[15] Song, Yang, and Stefano Ermon. Generative Modeling by Estimating Gradients of the Data Distribution. arXiv:1907.05600, arXiv, 10 Oct. 2020

[16] Song, Yang, and Stefano Ermon. Improved Techniques for Training Score-Based Generative Models. arXiv:2006.09011, arXiv, 23 Oct. 2020

[17] Song, Yang, et al. Score-Based Generative Modeling through Stochastic Differential Equations. arXiv:2011.13456, arXiv, 10 Feb. 2021

[18] Song, Yang. Generative Modeling by Estimating Gradients of the Data Distribution, 5 May 2021

[19] Luo, Calvin. Understanding Diffusion Models: A Unified Perspective. 25 Aug. 2022

* Disclosure: Please note that some of the links above might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.