I was lucky and privileged enough to attend the ICCV 2023 conference in Paris. After collecting papers and notes, I decided to share my favourite papers along with my notes on their key ideas. If you like the notes below, share them on social media!

Towards understanding the connection between generative and discriminative learning

Key idea: A very new trend that I am extremely excited about is the connection between generative and discriminative modeling. Is there any shared representation between them?

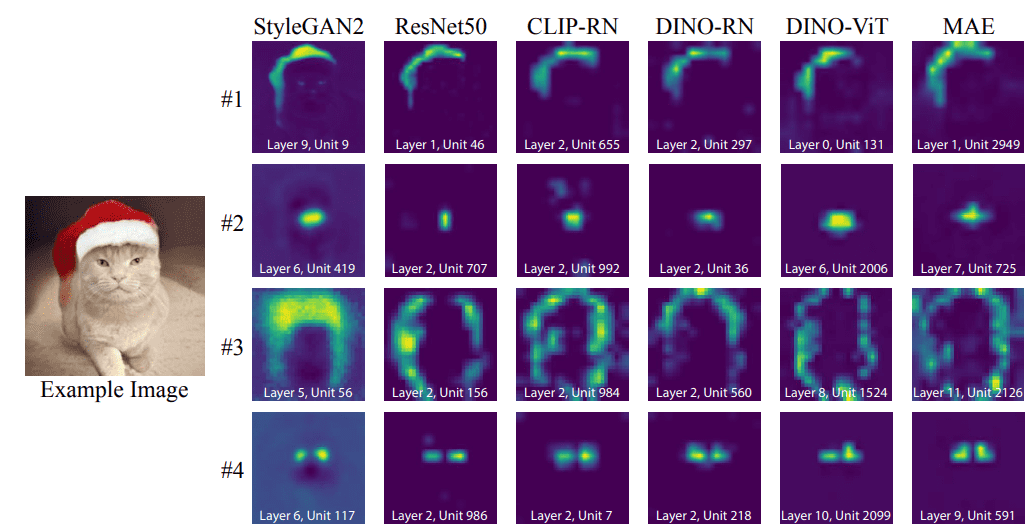

The authors demonstrate the existence of matching neurons (rosetta neurons) across different models that express a shared concept (such as object contours, object parts, and colors). These concepts emerge without any supervision or manual annotations. Source

Yes! The paper “Rosetta Neurons: Mining the Common Units in a Model Zoo” showed that completely different models pretrained with completely different objectives learn shared concepts (such as object contours, object parts, and colors). These concepts emerge without any supervision or manual annotations. I had only seen object-related concepts emerge on the self-attention maps of self-supervised vision transformers such as DINO so far. They further show that the activations look similar, even for StyleGAN2.

The process can be briefly described as follows: 1) use a trained generative model to produce images, 2) feed the images into a discriminative model and store all activation maps from all layers, 3) compute the Pearson correlation averaged over images and spatial dimensions, 4) find mutual nearest neighbors between all activations of the two models, 5) cluster them.
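To make steps 3 and 4 above more concrete, here is a minimal NumPy sketch. It is my own illustration and not the authors' code: the function names, the toy activation shapes, and the assumption that both models' activation maps were already resized to the same spatial size are all mine, and the clustering step is left out.

```python
import numpy as np

def pearson_matrix(acts_a, acts_b):
    """Pearson correlation between every unit of model A and every unit of model B.

    acts_a: (n_images, units_a, H, W), acts_b: (n_images, units_b, H, W).
    Assumes both were resized to the same H x W beforehand.
    """
    # Treat every (image, y, x) position as one sample: (units, samples).
    a = acts_a.transpose(1, 0, 2, 3).reshape(acts_a.shape[1], -1)
    b = acts_b.transpose(1, 0, 2, 3).reshape(acts_b.shape[1], -1)
    # Standardize each unit, then the dot product is the correlation.
    a = (a - a.mean(axis=1, keepdims=True)) / (a.std(axis=1, keepdims=True) + 1e-8)
    b = (b - b.mean(axis=1, keepdims=True)) / (b.std(axis=1, keepdims=True) + 1e-8)
    return a @ b.T / a.shape[1]

def mutual_nearest_neighbors(corr):
    """Keep pairs (i, j) where i's best match is j AND j's best match is i."""
    best_b_for_a = corr.argmax(axis=1)
    best_a_for_b = corr.argmax(axis=0)
    return [(i, int(j)) for i, j in enumerate(best_b_for_a) if best_a_for_b[j] == i]

# Toy data: "model B" holds the same 4 units as "model A", shuffled and slightly noisy.
rng = np.random.default_rng(0)
acts_gen = rng.normal(size=(8, 4, 5, 5))
perm = [2, 0, 3, 1]
acts_disc = acts_gen[:, perm] + 0.01 * rng.normal(size=(8, 4, 5, 5))

pairs = mutual_nearest_neighbors(pearson_matrix(acts_gen, acts_disc))
print(pairs)  # [(0, 1), (1, 3), (2, 0), (3, 2)]
```

The mutual check is what filters out one-sided matches: a unit only counts as a candidate shared concept if the preference goes both ways.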

Pre-pretraining: Combining visual self-supervised training with natural language supervision

Motivation: The masked autoencoder (MAE) randomly masks 75% of an image and trains the model to reconstruct the masked input image by minimizing the pixel reconstruction error. MAE has only been shown to scale with model size on ImageNet.
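As a rough illustration of the masking step, the sketch below picks 75% of the patches at random and computes a reconstruction error on them. It is a toy NumPy version under my own assumptions: the helper names, the 14×14 patch grid, and the random "patches" are made up, and restricting the loss to the masked patches follows the original MAE paper rather than anything stated above.

```python
import numpy as np

def random_patch_mask(num_patches, mask_ratio=0.75, rng=None):
    """Boolean mask over patches; True means the patch is hidden from the encoder."""
    rng = np.random.default_rng() if rng is None else rng
    num_masked = int(round(num_patches * mask_ratio))
    mask = np.zeros(num_patches, dtype=bool)
    mask[rng.permutation(num_patches)[:num_masked]] = True
    return mask

def masked_mse(pred, target, mask):
    """Mean squared pixel error, averaged over the masked patches only."""
    per_patch = ((pred - target) ** 2).mean(axis=1)
    return per_patch[mask].mean()

rng = np.random.default_rng(0)
num_patches, patch_dim = 14 * 14, 16 * 16 * 3   # hypothetical 224x224 image, 16x16 patches
patches = rng.normal(size=(num_patches, patch_dim))
mask = random_patch_mask(num_patches, 0.75, rng)

print(mask.sum(), "of", num_patches, "patches are masked")
print(masked_mse(np.zeros_like(patches), patches, mask))  # error of an all-zeros "reconstruction"
```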

On the other hand, weakly supervised learning (WSL), which here means natural language supervision, pairs each image with a text description. WSL is a middle ground between supervised and self-supervised pretraining; CLIP is a well-known example.

Key idea: While MAE thrives in dense vision tasks like segmentation, WSL learns abstract features and has a remarkable zero-shot performance. Can we find a way to get the best of both worlds?

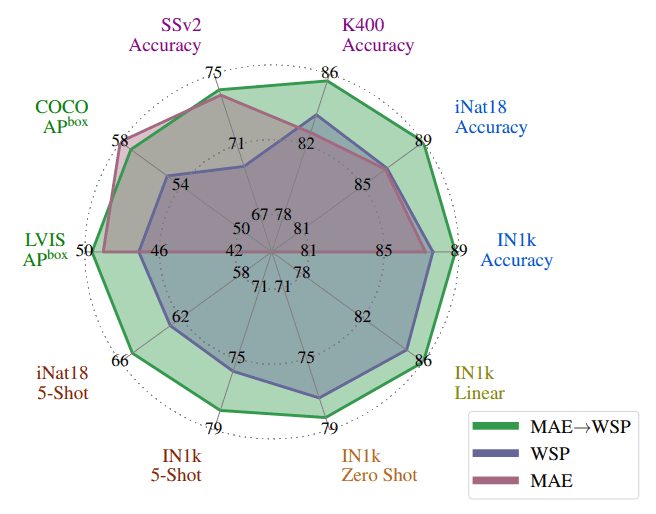

MAE pre-pretraining improves performance. Transfer performance of a ViT-L architecture trained with self-supervised pretraining (MAE), weakly supervised pretraining on billions of images (WSP), and our pre-pretraining (MAE → WSP) that initializes the model with MAE and then pretrains with WSP. Pre-pretraining consistently improves performance. Source

Meta AI shows that it is possible in their work “The effectiveness of MAE pre-pretraining for billion-scale pretraining”.

Key idea: Combine MAE self-supervised (1st stage → pre-pretraining) and weakly-supervised learning (2nd stage pretraining). This combination called MAE→WSP outperforms using either strategy in isolation, i.e., an MAE model or a weakly supervised model trained from scratch.

Adapting a pre-trained model by refocusing its attention

Since foundational models are the way to go, finding clever ways to adapt them to various downstream tasks is a critical research avenue.

Researchers from UC Berkeley and Microsoft Research show that it can be achieved by a TOp-down Attention STeering (TOAST) approach in their paper “TOAST: Transfer Learning via Attention Steering”.

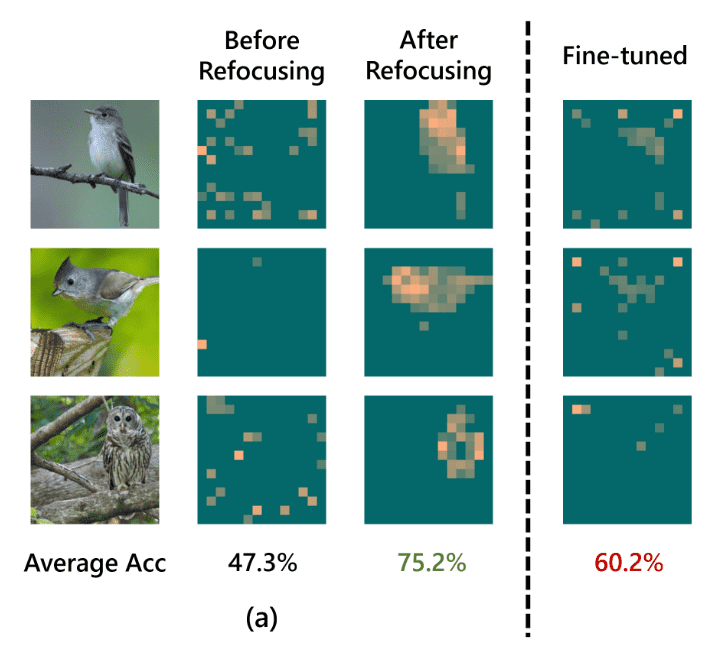

Key idea: Given a pretrained ViT backbone, they tune the additional linear layers of their method, which act as feedback paths after the 1st forward pass. As a result, the model can redirect its attention to the task-relevant features and, as shown below, it can outperform standard fine-tuning (75.2% vs. 60.2% accuracy).

An ImageNet pre-trained ViT is used for downstream bird classification using different transfer learning algorithms. Here they visualize the attention maps of these models. Each attention map is averaged across different heads in the last layer of ViT. Source

Intuitively, the top-down signals (after the 1st feedforward pass) will select and propagate the task-relevant features in each layer, and the 2nd feedforward will have access to those enhanced features, achieving stronger performance.

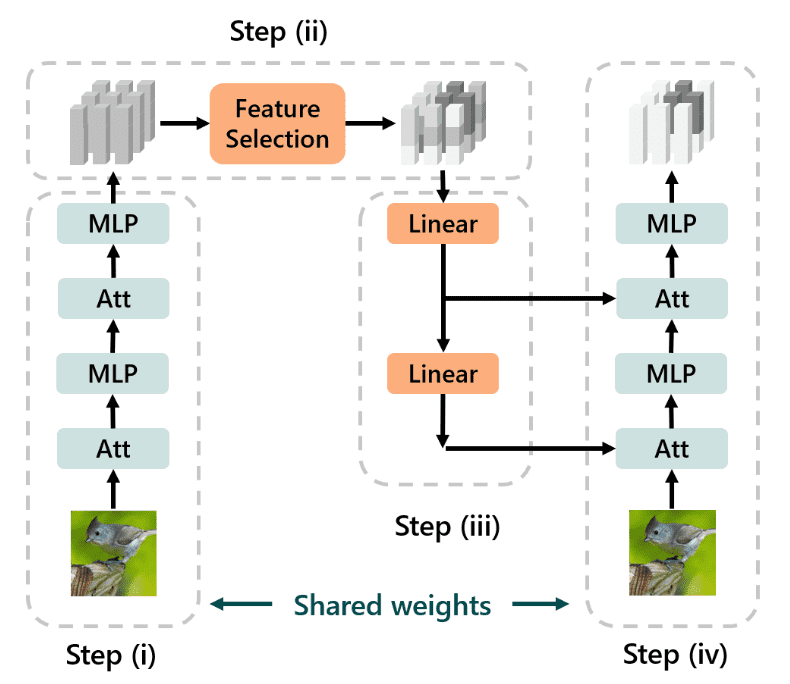

Inference has four steps: (i) the input goes through the feedforward transformer, (ii) the output tokens are softly reweighted by the feature selection module based on their relevance to the task, (iii) the reweighted tokens are sent back through the feedback path, and (iv) we run the feedforward pass again but with each attention layer receiving additional top-down inputs. During the transfer, we only tune the feature selection module and the feedback path and keep the feedforward backbone frozen. Source: TOAST

If you are interested in learning more about top-down attention, the same group has published similar work at CVPR.

Image and video segmentation using discrete diffusion generative models

Google DeepMind presented an intriguing work called “A Generalist Framework for Panoptic Segmentation of Images and Videos”.

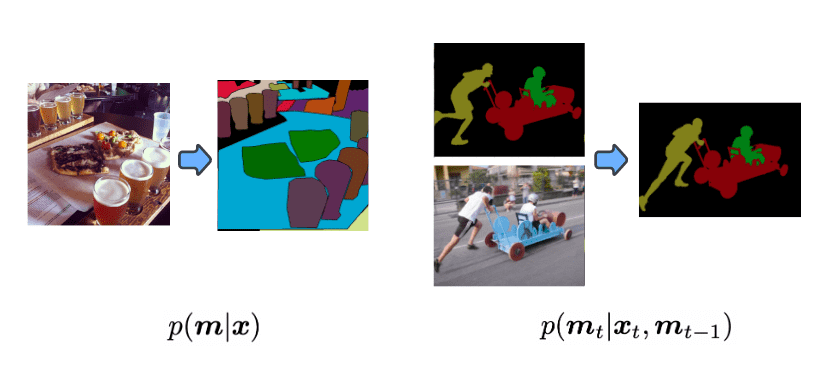

Key idea: A diffusion model is proposed to model panoptic segmentation masks, with a simple architecture and a generic loss function. Specifically for segmentation, we want the class and the instance ID, which are discrete targets. For this reason, the well-known Bit Diffusion was used.

“Bit Diffusion first converts integers representing discrete tokens into bit-strings, the bits of which are then cast as real numbers (a.k.a., analog bits) to which continuous diffusion models can be applied. To draw samples, Bit Diffusion uses a conventional sampler from continuous diffusion, after which a final quantization step (simple thresholding) is used to obtain the categorical variables from the generated analog bits.” ~ Chen et al.
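The quote above can be sketched in a few lines of NumPy: integers become bit-strings, the bits become real numbers, and thresholding brings them back. This is only an illustration under my own assumptions: the function names are hypothetical, the bits are scaled to {-1, +1} before thresholding at 0, and a bounded random perturbation stands in for the actual diffusion sampler.

```python
import numpy as np

def int_to_analog_bits(x, num_bits):
    """Integers -> bit-strings -> real-valued "analog bits" in {-1.0, +1.0}."""
    shifts = np.arange(num_bits - 1, -1, -1)          # most significant bit first
    bits = (x[..., None] >> shifts) & 1
    return bits.astype(np.float32) * 2.0 - 1.0

def analog_bits_to_int(analog):
    """Quantize by simple thresholding, then read the bit-string as an integer."""
    bits = (analog > 0).astype(np.int64)
    weights = 1 << np.arange(bits.shape[-1] - 1, -1, -1)
    return (bits * weights).sum(axis=-1)

# A tiny 2x2 "panoptic mask" of instance IDs, encoded with 3 bits per pixel.
ids = np.array([[0, 3], [5, 7]])
analog = int_to_analog_bits(ids, num_bits=3)          # shape (2, 2, 3)

# Stand-in for an imperfect sample from a continuous diffusion model.
rng = np.random.default_rng(0)
noisy = analog + rng.uniform(-0.4, 0.4, size=analog.shape)

recovered = analog_bits_to_int(noisy)
print(recovered)
```

Because the perturbation here never crosses the threshold, the recovered IDs equal the original ones; a real sampler gives no such guarantee.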

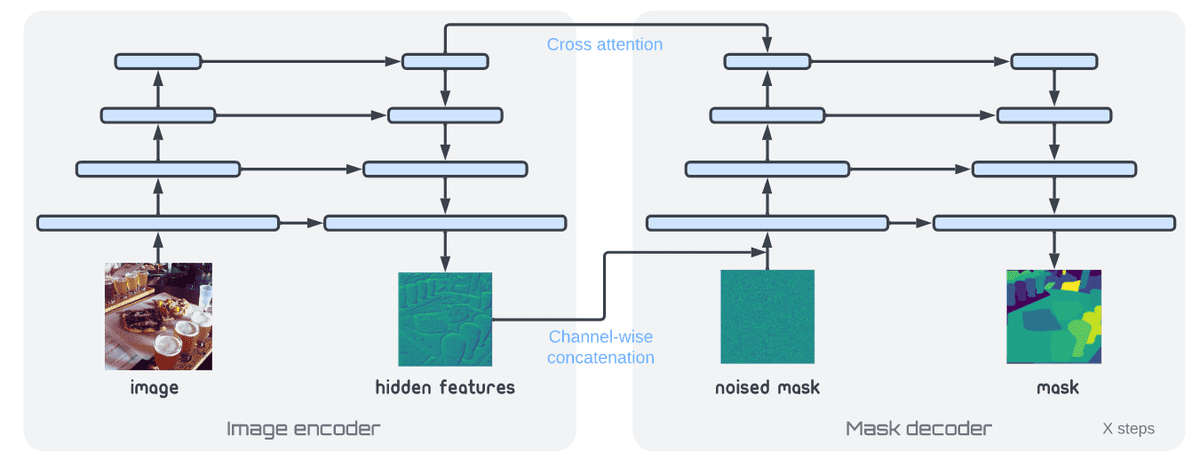

The architecture for the proposed panoptic mask generation framework. We separate the model into image encoder and mask decoder so that the iterative inference at test time only involves multiple passes over the decoder. Source

The diffusion model is pretrained unconditionally to produce the segmentation mask and then the pretrained image encoder plus the diffusion model are jointly trained for conditional segmentation.

Crucially, by simply adding past predictions as a conditioning signal, our method is capable of modeling video (in a streaming setting) and thereby learns to track object instances automatically.

The authors formulate panoptic segmentation as a conditional discrete mask (m) generation problem for images (left) and videos (right), using a Bit Diffusion generative model. Source

Amazingly, it works out of the box. The model automatically learns to track and segment instances across frames when incorporating the past-conditional generation.

This approach underperforms task-specific approaches, but given that both the architecture and the loss function are task-agnostic, the results are impressive.

Diffusion models for stochastic segmentation

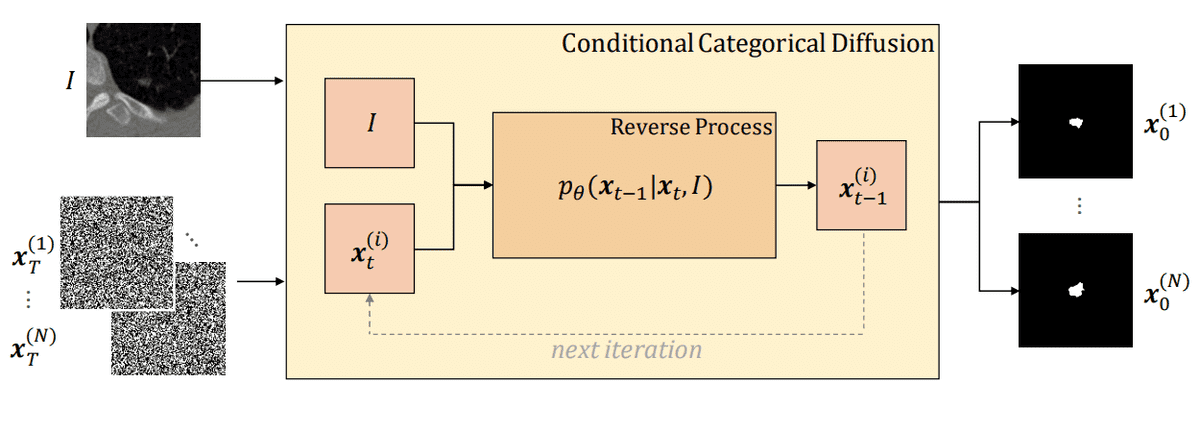

In a related work, researchers from the University of Bern showed that categorical diffusion models can be used for stochastic image segmentation in their work titled “Stochastic Segmentation with Conditional Categorical Diffusion Models”.

Illustration of the reverse process of our method. The conditional categorical diffusion model (CCDM) receives as input an image I and a categorical label map sampled from the categorical uniform noise. Source

If you want to learn more about categorical diffusion, here is a paper presentation from NeurIPS 2021.

Diffusion models: replacing the commonly-used U-Net with transformers

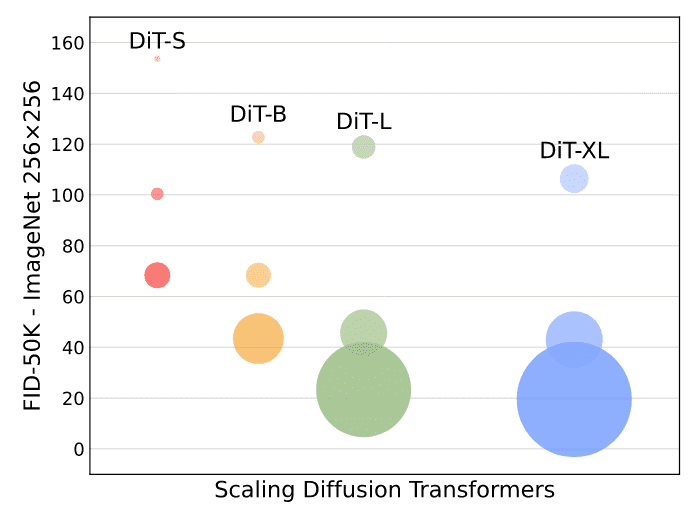

The paper “Scalable Diffusion Models with Transformers” shows that one can use transformers within the diffusion framework and obtain competitive performance on class-conditional ImageNet benchmarks up to 512×512 resolution.

The motivation is that transformers/ViTs come with established best practices and have been shown to scale more effectively for visual recognition than traditional convolutional networks, the main building block of U-Nets in current diffusion models.

Key idea: In short, the authors show that by constructing and benchmarking the Diffusion Transformers (DiTs) design space under the Latent Diffusion Models (LDMs) framework, where diffusion models are trained within a VAE’s latent space, one can successfully replace the U-Net backbone with a transformer. They further show that DiTs are scalable architectures for diffusion models: there is a strong correlation between the network complexity (measured by Gflops) vs. sample quality (measured by FID).

ImageNet generation with Diffusion Transformers (DiTs). Bubble area indicates the flops of the diffusion model. Left: FID-50K (lower is better) of our DiT models at 400K training iterations. Performance steadily improves in FID as model flops increase. Source

Diffusion Models as (Soft) Masked Autoencoders

Sander Dieleman has already talked about the connection between diffusion models and denoising autoencoders (excluding bottleneck, and including the multiple noise levels) in this blog post.

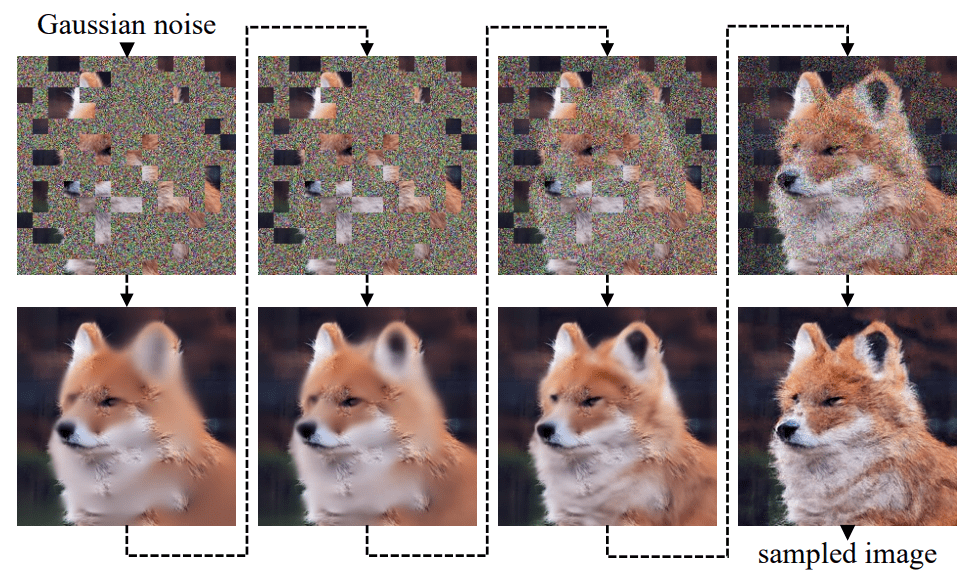

Key idea: In this direction, the paper Diffusion Models as Masked Autoencoders proposes conditioning diffusion models on patch-based masked input. In standard diffusion, noising typically takes place pixel-wise, which can be regarded as soft pixel-wise masking.

On the other hand, the masked autoencoder receives masked pixels, a type of hard masking, as pixels are simply zeroed. By combining those two, the authors formulate diffusion models as masked autoencoders (DiffMAE).

Inference process of DiffMAE, which iteratively unfolds from random Gaussian noise to the sampled output. During training, the model learns to denoise the input at different noise levels (from top row to the bottom) and simultaneously performs self-supervised pre-training for downstream recognition. Source

The encoded features can serve as an initialization for fine-tuning downstream tasks and produce state-of-the-art video classification accuracy. Notably, the decoder is larger than in MAE, while some additional cross-attention/skip connections are used.

Denoising Diffusion Autoencoders as Self-supervised Learners

Visual representation learning is improving from all different directions such as supervised learning, natural language weakly supervised learning, or self-supervised learning. And from now on with Diffusion Models!

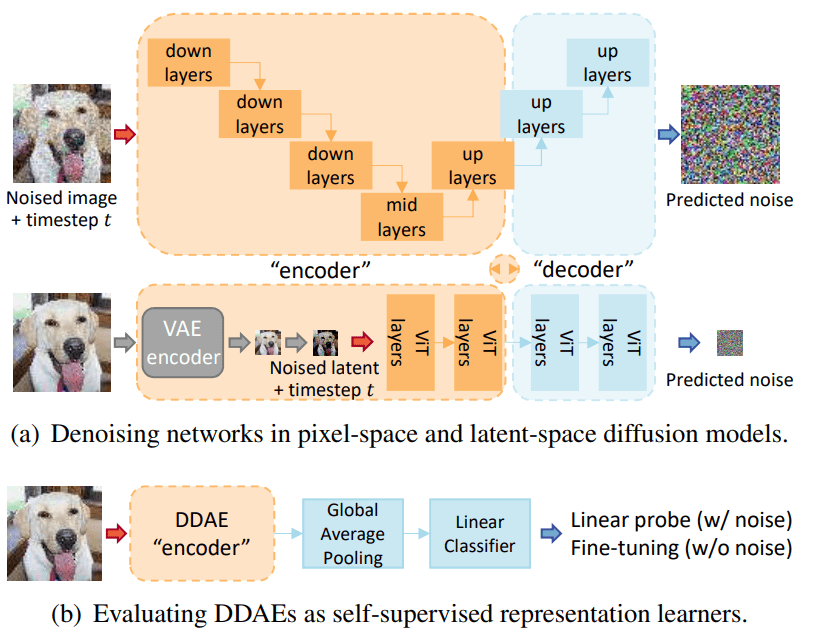

In a similar research direction to the Diffusion-MAE, the paper “Denoising Diffusion Autoencoders are Unified Self-supervised Learners” found that even the standard unconditional diffusion models can be leveraged for representation learning similar to self-supervised models.

Key idea: More concretely, by pre-training on unconditional image generation, diffusion models are already capturing linear-separable representations within their intermediate layers, without modifications.

Denoising Diffusion Autoencoders (DDAE). Top: Diffusion networks are essentially equivalent to level-conditional denoising autoencoders (DAE). The networks are named as DDAEs due to this similarity. Bottom: By linear probe evaluations, we confirm that DDAE can produce strong representations at some intermediate layers. Truncating and fine-tuning DDAE as vision encoders further leads to superior image classification performance. Source

This work is important as it unifies the previously unrelated fields of generative and discriminative learning. Limitations and important factors of this approach are that the feature quality heavily depends on layer depths and noising scales.

For example, on CIFAR-10 the best features lie in the middle of the U-Net decoder, when images are perturbed with a small amount of noise.

The authors indicate that training diffusion models is extremely costly and that best practices in discriminative representation learning (e.g. BYOL, DINO) may inspire advancements that will scale the training of diffusion models.

Leveraging DINO attention masks to the maximum

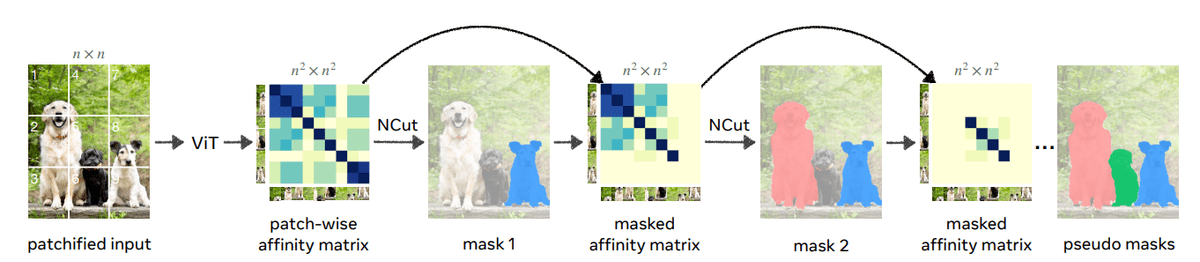

This work is amazing as it uses the attention masks from the self-supervised method DINO to perform zero-shot unsupervised object detection and even instance segmentation!

Key idea: They propose a simple framework called Cut-and-LEaRn (CutLER). They leverage the property of self-supervised models to ‘discover’ objects without supervision (in their attention maps). They post-process those masks to train a state-of-the-art localization model without any human labels. The post-processing is based on a classical computer vision algorithm called normalized graph cuts and it seems to generate very good masks.

Normalized Cuts (NCut) treats the image segmentation problem as a graph partitioning task. We construct a fully connected undirected graph by representing each image patch as a node. Each pair of nodes is connected by an edge with weight Wij that measures the similarity of the connected nodes.
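Here is a minimal NumPy sketch of a single Normalized Cuts bipartition on patch features, to show what "graph partitioning" means in practice. It is not the CutLER implementation: the function name, the clipped cosine similarity used as edge weight, the `eps` floor, and the toy 3-dimensional "features" are my own assumptions. It solves the relaxed problem (D − W)y = λDy through the symmetric normalized Laplacian and splits the patches at the mean of the second-smallest eigenvector.

```python
import numpy as np

def ncut_bipartition(features, eps=1e-5):
    """Split patches into two groups with one Normalized Cuts step.

    features: (num_patches, dim) array, e.g. per-patch features from a ViT.
    Returns a boolean array; which side is True is arbitrary.
    """
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    # Edge weights W_ij: cosine similarity, clipped to stay non-negative.
    W = np.clip(f @ f.T, 0.0, None) + eps
    d = W.sum(axis=1)                                  # node degrees (diagonal of D)
    d_inv_sqrt = 1.0 / np.sqrt(d)
    # Symmetric normalized Laplacian: I - D^{-1/2} W D^{-1/2}.
    L_sym = np.eye(len(d)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(L_sym)                 # eigenvalues in ascending order
    # Map the second-smallest eigenvector back to the generalized problem.
    y = d_inv_sqrt * eigvecs[:, 1]
    return y > y.mean()

# Toy example: 6 "object" patches and 4 "background" patches in feature space.
rng = np.random.default_rng(0)
obj = np.array([1.0, 0.0, 0.0]) + 0.01 * rng.normal(size=(6, 3))
bg = np.array([0.0, 1.0, 0.0]) + 0.01 * rng.normal(size=(4, 3))
mask = ncut_bipartition(np.vstack([obj, bg]))
print(mask)
```

To find several objects, one would mask out the affinities of the patches already assigned to a foreground mask and run the same step again on what is left.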

An illustration of how to discover multiple object masks in an image without supervision. The authors build upon previous works and create a patch-wise similarity matrix for the image using a self-supervised DINO model’s features. Subsequently they apply Normalized Cuts to this matrix and obtain a single foreground object mask of the image. They then mask out the affinity matrix values using the foreground mask and repeat the process, which allows the algorithm to discover multiple object masks in a single image. In this pipeline illustration this process is repeated 3 times.

Then a detector is trained with these masks, while self-training further improves the performance.

Generative learning on images: can’t we do better than FID?

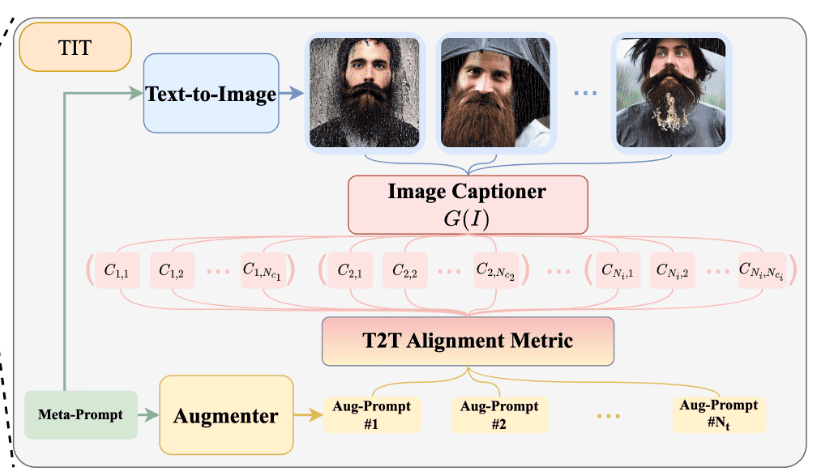

In the direction of alternative evaluations of generative models, I really like the approach from the paper “HRS-Bench: Holistic, Reliable and Scalable Benchmark for Text-to-Image Models”, among other existing ones, which are mainly based on CLIP and only applicable to text-conditional image generation.

Key idea: Measure image quality (fidelity) through text-to-text alignment using CLIP (the Image Captioner model G(I) in the figure below).

An example of an alternative to FID using CLIP and text to text similarity/alignment. Source

Examples of text-to-text scores include CIDEr and BLEU and they are well-established in the NLP literature. I am expecting more papers in this direction and for various types of conditions.
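To give a feeling for what a text-to-text score measures, here is a small pure-Python sketch of clipped n-gram precision, the basic ingredient of BLEU (full BLEU additionally combines several n-gram orders and applies a brevity penalty). The prompt and the captions are made-up strings; in the benchmark setting the caption would come from the captioner G(I) applied to the generated image.

```python
from collections import Counter

def ngrams(tokens, n):
    """Count all contiguous n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def clipped_ngram_precision(candidate, reference, n=1):
    """Fraction of candidate n-grams that also appear in the reference.

    Counts are clipped so that repeating a matching word cannot inflate the score.
    """
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    return overlap / total

prompt = "a red car parked next to a tree"       # text the image was generated from
good_caption = "a red car next to a tree"        # hypothetical caption of a faithful image
bad_caption = "a dog on a beach"                 # hypothetical caption of an unfaithful image

print(clipped_ngram_precision(good_caption, prompt, n=1))  # 1.0
print(clipped_ngram_precision(good_caption, prompt, n=2))  # 5 of 6 bigrams match
print(clipped_ngram_precision(bad_caption, prompt, n=1))   # 0.4
```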

The paper has many more evaluations regarding generative models.

Note: Even though ImageBind and DINOv2 were not accepted papers in ICCV they were presented in the booth of Meta AI and they were heavily discussed during the week of the conference.

Meta AI has built an open-source framework called ImageBind in their paper “ImageBind: One Embedding Space To Bind Them All”, the first AI model that brings together information coming from six different modalities in a single embedding space.

Key idea: The model learns a single embedding, or shared representation space, for text, images, audio, depth (3D), thermal (infrared radiation), and inertial measurement units (IMU). ImageBind creates a joint embedding space across multiple modalities without needing to train on data with every different combination of modalities.

How? Short answer: Using a transformer and a contrastive learning objective.

The shared embedding space enables multi-modal retrieval, an amazing new tool. For instance, given a photo, we can retrieve the sounds that are semantically closest to it in the shared feature space. Imagine you have an image of the sea with waves: you can retrieve similar sounds, such as the sound of a wave. Or even get a 3D shape from the depth sensor, etc.
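The two ingredients mentioned above, a contrastive objective and retrieval in a shared space, can be sketched with plain NumPy. This is a toy illustration rather than ImageBind's code: the embeddings are random vectors standing in for encoder outputs, the function names and the temperature of 0.07 are my assumptions, and I use an InfoNCE-style loss between image embeddings and one other modality.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def info_nce(image_emb, other_emb, temperature=0.07):
    """Contrastive loss: row i of image_emb should match row i of other_emb."""
    logits = l2_normalize(image_emb) @ l2_normalize(other_emb).T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)       # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))

def retrieve(query_emb, gallery_emb, k=1):
    """Indices of the k gallery items closest to the query (cosine similarity)."""
    sims = l2_normalize(gallery_emb) @ l2_normalize(query_emb)
    return np.argsort(-sims)[:k]

rng = np.random.default_rng(0)
image_emb = rng.normal(size=(5, 16))                          # 5 images, 16-dim embeddings
audio_emb = image_emb + 0.05 * rng.normal(size=(5, 16))       # well-aligned audio clips

aligned_loss = info_nce(image_emb, audio_emb)
shuffled_loss = info_nce(image_emb, np.roll(audio_emb, 1, axis=0))
print(aligned_loss < shuffled_loss)                           # aligned pairs give a lower loss

top = retrieve(image_emb[2], audio_emb, k=1)
print(top)                                                    # the audio clip paired with image 2
```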

Traditionally, there is a specific embedding (that is, vectors of numbers that can represent data and their relationships in machine learning) for each respective modality, produced by what is called a specialist model in this context. ImageBind can outperform prior specialist models trained individually for one particular modality, as described in the paper, as well as combine different forms of information.

DINOv2: Data curation matters for self-supervised learning + scaling up DINO/iBOT

I have covered a few times the approach of DINO in the blog and lectures.

Key idea: DINOv2 builds upon another framework called iBOT that combines cross-entropy from different augmented views with masked image modeling. They essentially find how to speed up training and scale to larger batch sizes.

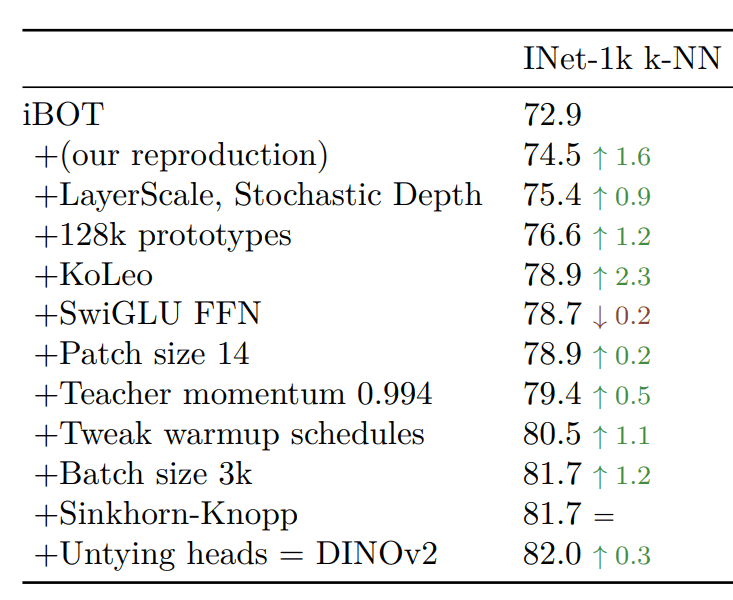

Ablation study for the ViT-Large architecture on ImageNet-22k using iBOT as a baseline. The authors use k-NN classification performance as the metric to optimize. Source

The second axis revolves around curating an unlabeled set of images for self-supervised learning. This is achieved with either k-means clustering (+sampling) on the feature space of a large ViT model pretrained on ImageNet-22K with DINOv2 or with simple k-nearest neighbors (k-NN) retrieval.

Miscellaneous top 10 personal picks from ICCV 2023

- Sigmoid Loss for Language Image Pre-Training: An alternative to the contrastive objective used in CLIP for large-scale pretraining, which scales to larger batch sizes by avoiding the softmax normalization. The authors propose a simple pairwise sigmoid loss for image-text pre-training. The new sigmoid-based loss operates solely on image-text pairs and does not require a global view of the pairwise similarities for normalization.

- Distilling Large Vision-Language Model with Out-of-Distribution Generalizability: This paper investigates the distillation from vision-language models (teacher) into lightweight student models on small datasets, including open-vocabulary out-of-distribution (OOD) generalization. Contributions: (i) it combines a contrastive distillation (InfoNCE) loss between teacher and student with a modified version of mean squared error (MSE) for better visual alignment, and (ii) it enriches the teacher’s language representations with informative and fine-grained semantic text-based attributes to effectively distinguish between different labels.

- Keep It SimPool: Who Said Supervised Transformers Suffer from Attention Deficit?: A simple attention-based pooling mechanism as a replacement for the default one in both convolutional and transformer encoders that works for supervised and self-supervised learning approaches. SimPool improves performance on pre-training and downstream tasks and provides high-quality attention maps delineating object boundaries in all cases.

- Unified Visual Relationship Detection with Vision and Language Models: This work focuses on training a single visual relationship detector (Unified Visual Relationship Detection by leveraging vision and language models) to predict the union of label spaces from multiple datasets. It tackles the issue of merging labels coming from different datasets using the second-order visual semantics between pairs of objects.

- An Empirical Investigation of Pre-trained Model Selection for Out-of-Distribution Generalization and Calibration: Highlights the importance of pre-trained model selection for out-of-distribution generalization. ImageNet-trained supervised ConvNeXt generally outperforms the other considered models. The correlation between in-distribution and OOD generalization does not always follow a linearly increasing pattern, and the choice of dataset heavily influences it.

- Discovering prototypes for dataset comparison: Allows comparing datasets by simply looking at the images belonging to the most frequently learned prototypes. How: Uses DINO on the concatenation of 2 (or more) datasets and aims to investigate the learned prototypes. By picking the most frequently utilized clusters after training, one can identify prototypes that belong to only one of the datasets, or datasets that share similar semantic concepts.

- Understanding the Feature Norm for Out-of-Distribution Detection: Proposes the usage of feature norms multiplied by the sparsity as a generic metric that can be combined with k-NN distance for state-of-the-art OOD detection with ResNets/CNNs.

- Benchmarking Low-Shot Robustness to Natural Distribution Shifts: 1) Self-supervised ViTs generally perform better than CNNs and the supervised counterparts on both ID and OOD shifts, but no single initialization or model size works better across datasets. 2) ImageNet-supervised ViT significantly outperforms ImageNet-21k supervised ViT on OOD shifts. 3) Existing robustness intervention methods can fail to improve robustness for datasets other than ImageNet.

- Distilling from Similar Tasks for Transfer Learning on a Budget: Finds a scalar weight for each pretrained vision foundational model from a set of source models, by using task similarity metrics to estimate the alignment of each source model with the particular target task. For that, they assume that a small set of labeled data is available. The proposed task similarity metrics are independent of feature dimension, and they can therefore utilize models of any architecture. Based on the computed per-model weights, one can take the best model for distillation or a combination of those models weighted by the computed weights.

- Leveraging Visual Attention for out-of-distribution Detection: A new out-of-distribution detection method that involves training a Convolutional Autoencoder to reconstruct attention heatmaps produced by a pretrained ViT classifier, enabling accurate image reconstruction and effective OOD detection.
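Going back to the first pick in the list above, the pairwise sigmoid loss is compact enough to sketch in NumPy. This is a toy version under my own assumptions, not the authors' code: the function name and the random embeddings are made up, and the default temperature `t=10` and bias `b=-10` are the initial values I recall from the paper. Every image-text pair is treated as its own binary classification problem (label +1 on the diagonal, -1 elsewhere), so no softmax over the whole batch is needed.

```python
import numpy as np

def pairwise_sigmoid_loss(img_emb, txt_emb, t=10.0, b=-10.0):
    """Sigmoid loss over all image-text pairs in a batch, averaged per image."""
    img = img_emb / np.linalg.norm(img_emb, axis=1, keepdims=True)
    txt = txt_emb / np.linalg.norm(txt_emb, axis=1, keepdims=True)
    logits = t * img @ txt.T + b
    labels = 2.0 * np.eye(len(img)) - 1.0          # +1 for matching pairs, -1 otherwise
    # log(sigmoid(x)) = -log(1 + exp(-x)), computed stably with logaddexp.
    log_sigmoid = -np.logaddexp(0.0, -labels * logits)
    return -log_sigmoid.sum() / len(img)

rng = np.random.default_rng(0)
img_emb = rng.normal(size=(4, 8))
txt_emb = img_emb.copy()                           # toy case: perfectly aligned pairs

loss_aligned = pairwise_sigmoid_loss(img_emb, txt_emb)
loss_shuffled = pairwise_sigmoid_loss(img_emb, np.roll(txt_emb, 1, axis=0))
print(loss_aligned < loss_shuffled)                # mismatched pairs are penalized more
```

Since each term depends on a single pair only, the sum can be computed in chunks across devices, which is what makes the larger batch sizes practical.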

Concluding thoughts

It was my first time at a conference. It was certainly worth it if you want to catch up on the latest work in the field, as arXiv preprints are impossible to track.

Here are some personal perspectives and summaries:

- Diffusion models seem very promising candidates for much more than generating artistic pictures based on prompts, as I had previously thought.

- Visual self-supervised learning and natural language supervision (weakly supervised learning) both seem to be useful, and more approaches are expected to combine them rather than compare them.

- Generalization still seems to be an unsolved issue while new datasets and benchmarks may be needed.

- Foundational/pretrained models are the go-to method and from-scratch approaches seem rarer yet quite valuable.

- Adapting the pretrained models on downstream tasks with minimal computing and for diverse distributions seems to be another key research direction.

- It is still unclear why the attention of self-supervised models like DINO ViT leads to informative masks, while supervised models need specific mechanisms or attention-steering approaches.
