If you follow DLabs.AI on LinkedIn, you might have noticed we publish a series there called ‘InsideAI.’ As we want as many readers to enjoy it as possible, we’ve now decided to post it on our blog too.

For the uninitiated: InsideAI is our monthly newsletter that captures the most interesting, surprising, and important news from the previous four weeks in the wonderful world of AI. This month, it includes everything that caught our eye in February.

Ready for some shock and awe?!

Let’s dive in.

Is facial recognition making the innocent look guilty?

Facial recognition is a controversial technology. Many of us have heard stories of AI mistaking people for animals, but that’s just the tip of the iceberg. We’re now learning how law enforcement uses artificial intelligence to identify criminals. But when the technology gets it wrong, we’re not just seeing the possibility of embarrassment.

These cases of mistaken identity are sending innocent people to jail. One American citizen stood accused of stealing a pair of socks from a TJ Maxx store, even though he was by his wife’s side as she went through labor at the time of the crime.

Source: Wired

Spoiler alert: Data can reveal plot twists on Netflix

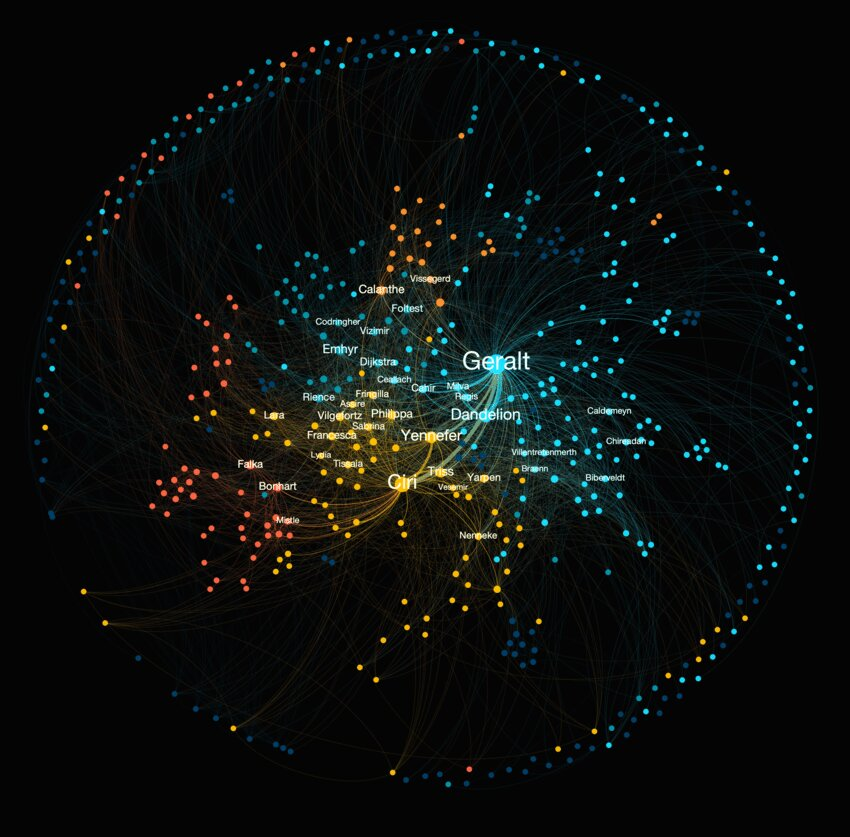

Some will say it needs no introduction… but just in case you haven’t heard of it, “The Witcher” is a fantasy novel series by Andrzej Sapkowski, which has also become a Netflix sensation. Still, we’re not here to give you Netflix recommendations, so why are we talking about this show?

Well, because Milán Janosov, a lead scientist at Datopolis, recently used his network science know-how to summarize the plot and map out the character relationships. He then published a visual network map that traces the hidden patterns, storylines, and character connections. You can see it in the image below (but beware: if you look hard enough, you might just find some spoilers!).

The social map of The Witcher.

Source: Tech Xplore

Automating the administration of anesthetics

Anesthesiologists have their hands full in the operating theater. They not only have to keep patients adequately sedated; they also need to check that patients remain immobile, experience no pain, are physiologically stable, and receive enough oxygen. So if there were a way to take some load off their shoulders, it would be a welcome reprieve.

And that’s what a new deep learning algorithm from researchers at Massachusetts General Hospital (MGH) and MIT can do. The algorithm automatically optimizes the dose of an anesthetic drug called propofol, giving anesthesiologists a little extra breathing space. But that’s not to say they can forget all about anesthetics. Just as with cruise control: the autopilot is there to help, but you still have to monitor the situation.

Source: MIT News

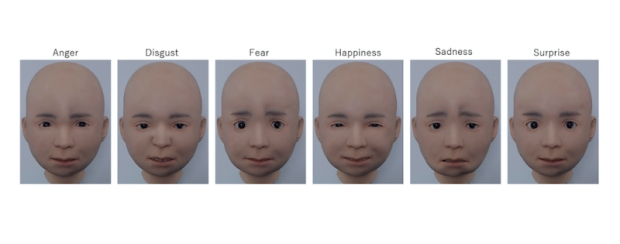

Meet Nikola: The ‘emotional’ android

If you asked us to name a key difference between a human and a robot, we’d probably say, ‘A human can show emotion.’ But the fact is: that difference is no more. Because while bots are still unlikely to feel real emotions, researchers from the RIKEN Guardian Robot Project based in Japan have created an android that can, on the surface, show emotion. Nikola (as the droid is affectionately known) can display six basic emotions: happiness, sadness, fear, anger, surprise, and disgust.

Here’s a challenge for you: cover the words above the images below, and see if you can guess the emotion expressed by Nikola in each photo.

Source: Science Daily


Virtual eyes for a virtual world

Human eyes hide mounds of information. They show if we’re bored or excited. They give away what we’re looking at. They can even suggest if we’re an expert at a given task or if we’re fluent in a foreign language — and that’s why metaverse developers have long wanted access to human eyes to train their technology.

Thankfully, a program called EyeSyn offers a less eye-intensive solution. Created by computer engineers at Duke University, it gives developers a set of virtual eyes that simulate data well enough to train new metaverse applications, which is a relief to eyes in the real world.

Source: Tech Xplore

This furniture will only work in space

Scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) channeled their inner kid in a recent experiment, re-imagining Lego with one key difference. They added electromagnetism to create a building block called an ElectroVoxel: a cube-shaped robot you can piece together into any shape imaginable.

Astronauts could use the innovation to solve the age-old problem of fitting furniture into tight spaces. And they’re excited by how these small blocks can piece together to create structures suited to various functions. The only shortcoming? Given how ElectroVoxels function, they are yet to be useful here on Earth.

Source: Popular Science

As ever — we’d love to hear which story you enjoyed the most.

And don’t forget: to get the latest AI news delivered right to your inbox every month, sign up for our newsletter today.