Here you will find the best online courses, books and blogs to learn how to apply Deep Learning in Computer Vision applications.

Justin Johnson does a phenomenal job outlining all aspects of Deep Learning from a computer vision perspective. You will learn everything you need to know, from fundamental concepts such as Backpropagation and Convolutional Neural Networks to Object Detection and Image Segmentation. A must for beginners.

Instructors: Justin Johnson

Topics that caught our eye:

- 3D Vision
- Reinforcement Learning
- Generative models

Part of the Deep Learning Specialization on Coursera, this course is designed to teach you everything about Convolutional Neural Networks and how they are applied to images and videos. You’ll start with the foundations of Convolutional Neural Networks, work through some case studies, and continue with object detection. Finally, you’ll dive into face recognition and Neural Style Transfer (NST).

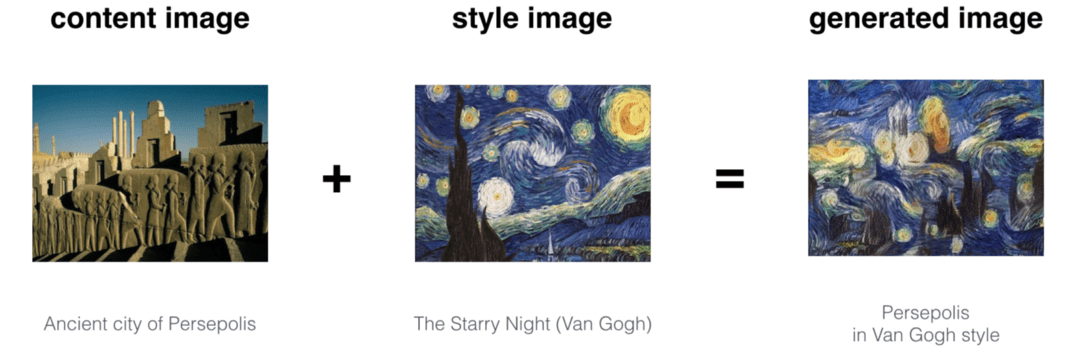

NST is an optimization technique used to take two images—a content image and a style reference image (such as an artwork by a famous painter)—and blend them together so the output image looks like the content image, but “painted” in the style of the style reference image.

Source: xpertup
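To make the "optimization" part of NST a little more concrete, here is a minimal sketch of the kind of loss such an optimization typically minimizes. It assumes the common formulation in which content is compared directly on feature maps and style is compared through Gram matrices of feature maps; the text above does not spell out a particular loss, so treat this as one plausible instance. The random arrays stand in for CNN activations, and the function names and the weights `alpha` and `beta` are hypothetical.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of a (C, H, W) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def content_loss(generated, content):
    """Mean squared difference between two feature maps."""
    return float(np.mean((generated - content) ** 2))

def style_loss(generated, style):
    """Mean squared difference between the Gram matrices of two feature maps."""
    return float(np.mean((gram_matrix(generated) - gram_matrix(style)) ** 2))

def nst_loss(generated, content, style, alpha=1.0, beta=1e3):
    """Weighted sum of content and style terms (alpha and beta are illustrative)."""
    return alpha * content_loss(generated, content) + beta * style_loss(generated, style)

# Random arrays standing in for CNN activations of the content and style images.
rng = np.random.default_rng(0)
content_feats = rng.normal(size=(8, 16, 16))
style_feats = rng.normal(size=(8, 16, 16))

# Starting from the content image, the content term is zero and only style contributes.
print(nst_loss(content_feats, content_feats, style_feats))
```

In a real setup the feature maps would come from a pretrained network, and the pixels of the generated image would be updated by gradient descent on this loss.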

Instructors: Andrew Ng, Younes Bensouda Mourri

Here we include two courses in one section because one is a continuation of the other. In particular, if you are interested only in Computer vision, focus on the following sub-courses:

As you may have guessed, you’ll learn how to solve real-world computer vision problems with TensorFlow. So be prepared for a thorough analysis of the framework and its intricacies.

Instructors: Laurence Moroney, Eddy Shyu

Disclosure: Please note that some of the links in this post might be affiliate links, and at no additional cost to you, we will earn a commission if you decide to make a purchase after clicking through.

This course focuses primarily on the fundamentals of Computer Vision and doesn’t spend much time on Deep Neural Networks. However, we think that one should always learn the basic principles before moving on to advanced concepts. If you agree with us, you should definitely check out this course.

Example topics:

- Camera models and view
- Lighting
- Tracking
- Image motion

Instructors: Aaron Bobick, Irfan Essa, Arpan Chakraborty

CS231n is one of the most well-known courses in Computer Vision and probably one of the most comprehensive. The public video lectures on YouTube are from 2017, so they might feel a bit outdated at times, but the course is still extremely well written and well conceived. A big plus is the incredible notes that one can find on the course’s website.

Example lectures:

- CNN architectures
- Detection and Segmentation
- Deep Reinforcement Learning
- Adversarial examples and adversarial training

Instructors: Fei-Fei Li, Justin Johnson, Serena Yeung

Udacity’s main computer vision program is a hands-on course that combines theoretical concepts with practical tutorials and real-life projects. Intermediate knowledge of Python, statistics and machine learning is required. It has an admittedly high cost, but it compensates with technical mentor support, a big student community, and personalized career services.

Projects:

- Facial keypoint detection
- Automatic image captioning
- Landmark detection and tracking

Instructors: Sebastian Thrun, Jay Alammar, Luis Serrano

This course by Udemy has a twofold focus: it explores basic computer vision principles as well as advanced deep learning techniques. You will use NumPy, OpenCV, and TensorFlow/Keras to solve a variety of practical problems.

Example lessons:

Instructors: Jose Portilla

Another course by Udemy, which deals heavily with deep learning architectures. You will learn about convolutional neural networks, single shot detectors and generative adversarial networks.

Example lessons:

-

Face detection with OpenCV

-

Object detection with SSD

-

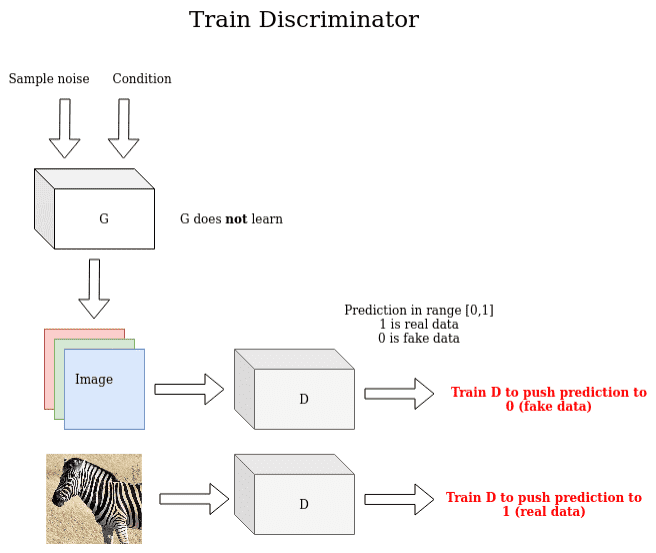

Image generation with Generative Adversarial Networks (GANs). GANs refer to two neural networks that play a min-max game throughout training (gradient ascend descend), namely the generator G and the Discriminator D. G’s input is random noise that is sampled from a distribution in a small range of real numbers. For image generation, G’s output will be a generated image. The main difference is that now we focus on generating representative examples of a specific distribution (i.e. dogs, paintings, street pictures, airplanes, etc.) The discriminator is nothing more than a binary classifier that focuses on learning the distribution of the class.

Image by Author
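As a rough illustration of the two-player game described in the caption, the sketch below computes the two losses from discriminator outputs alone. It is only a sketch under stated assumptions: the probabilities are made-up numbers rather than outputs of trained networks, the function names are hypothetical, and the generator uses the widely used non-saturating loss instead of the pure min-max term.

```python
import numpy as np

def bce(probs, labels, eps=1e-12):
    """Binary cross-entropy between predicted probabilities and 0/1 labels."""
    probs = np.clip(probs, eps, 1.0 - eps)
    return float(-np.mean(labels * np.log(probs) + (1 - labels) * np.log(1 - probs)))

def discriminator_loss(d_real, d_fake):
    """D wants D(x) close to 1 on real data and D(G(z)) close to 0 on generated data."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    """Non-saturating variant: G wants D(G(z)) close to 1."""
    return bce(d_fake, np.ones_like(d_fake))

# Made-up discriminator outputs: D is currently good at spotting fakes.
d_real = np.array([0.9, 0.8, 0.95])
d_fake = np.array([0.1, 0.2, 0.05])

print("D loss:", discriminator_loss(d_real, d_fake))
print("G loss:", generator_loss(d_fake))
```

When D separates real from generated samples well, its loss is low and the generator's loss is high; training alternates updates to the two networks so that each pushes against the other.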

Instructors: Kirill Eremenko, Hadelin de Ponteves, Ligency Team

A 480-page book that covers everything you need to know about modern computer vision systems. It is divided into three parts: deep learning foundations, image classification and detection, and generative models and visual embeddings. A great choice for intermediate Python programmers and deep learning beginners.

Chapters that caught our interest:

-

Advanced CNN architectures: LeNet, AlexNet, VGGNet, Inception

-

You only look once (YOLO). The YOLO family of architectures are a series of deep learning models designed for fast object detection, developed by Joseph Redmon, et al.

-

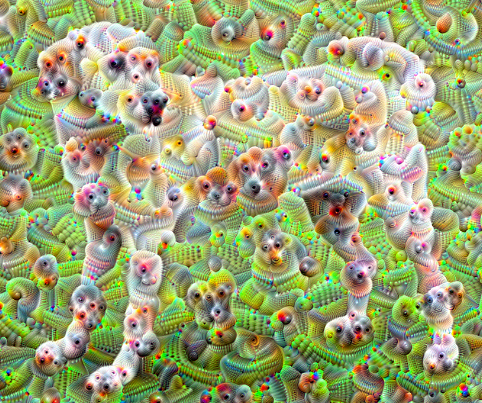

Deepdream and Neural Style Transfer. DeepDream is an experimental idea that aims to visualize neural network patterns. These patterns are learned by a neural network during training. In practice, DeepDream enhances the patterns it sees in an image.

-

Visual embeddings

An example of a deepdream image. Source: Tensorflow tutorials

Author: Mohamed Elgendy

Computer Vision Blogs

Other Computer Vision Courses

The following list is based on the awesome GitHub page of @jbhuang0604:
